How to build trust in your AI product

Whether it's added to please investors, to attract funding or because it genuinely makes sense, AI is becoming a bigger part of digital experiences.

But even if businesses are on board, users are still catching on. Building trust in AI products is a challenge, especially when many products cater to very broad needs, as ChatGPT does.

What's more, recent developments in LLMs have significantly lowered the cost of making AI part of a product: the price of tokens has fallen by more than 50% since November 2022. This makes it relatively easy both to integrate AI into existing products and to build new AI products.

With all this in mind, one of the big tasks of any AI product team is to build trust in their product. While this matters for every product, AI products come with some extra considerations for building a long-lasting relationship with users.

Keep on reading for our suggestions on how to build trust in your AI products and what you should keep in mind.

1. Deliver on your product promise

There's no quicker way to lose users' trust than to underdeliver on what you promise them. Put simply, your product has to do what it says it will do, and do it reasonably well. That should be the baseline. Otherwise, users have no real reason to use your product.

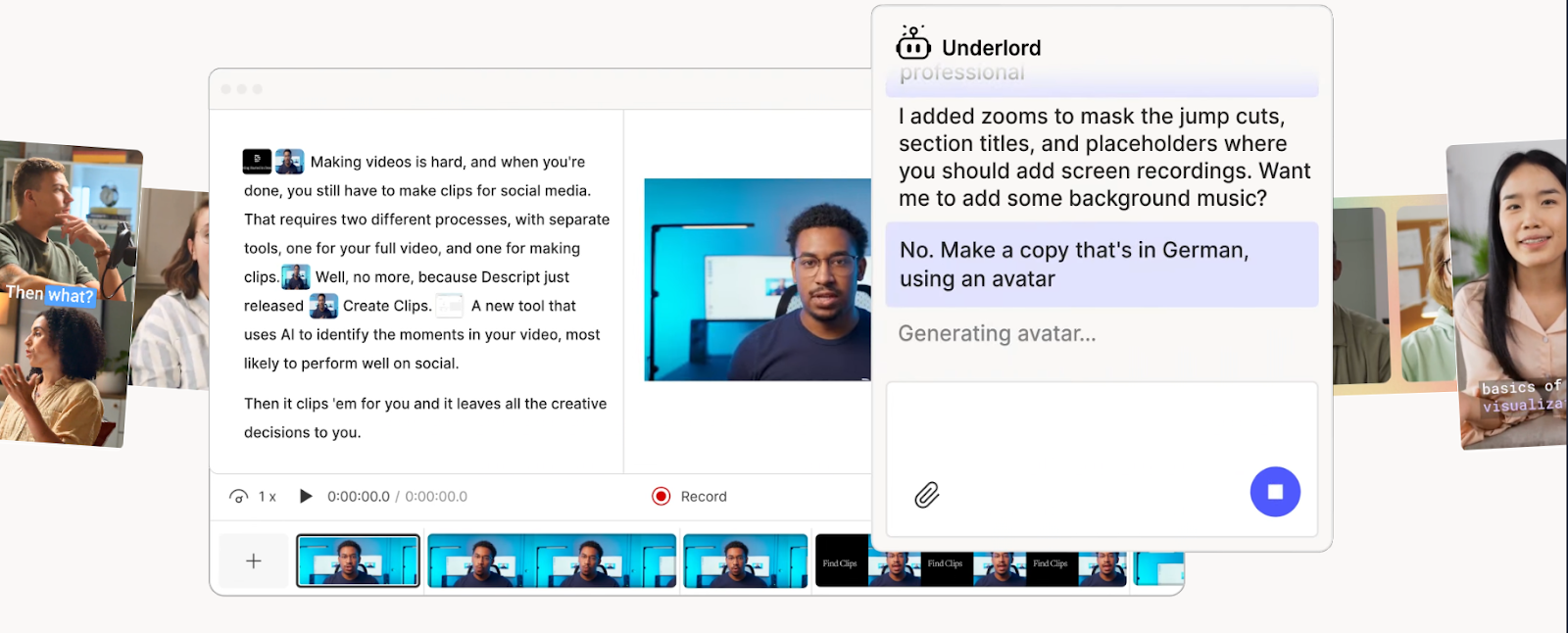

Two great examples here are Descript and Google's NotebookLM. Even before LLMs became popular, Descript was one of podcasters' go-to editing tools, making it easy to cut the many "ums" and "uhs" that come up in conversation. Several Descript alternatives now offer similar functionality, helping creators streamline their podcast editing workflow.

On a similar note, NotebookLM can now generate a full podcast from a few articles or even just some notes, without creators having to edit the material extensively.

Another great example is Gamma. This AI-powered presentation generator is also one of the most popular tools today, helping users create immersive presentations and slides. Midjourney, likewise, stands out as one of the industry standards for image generation.

2. Collect and use data responsibly

While this applies mostly to predictive AI tools, it's relevant for generative AI tools too. For predictive tools, whose output depends heavily on various types of user data as input, this is a particularly delicate subject. Personalisation, one of the key applications of AI products, also works best when there's enough data available for the experience to be genuinely personalised and relevant.

Tell your users upfront what data you're collecting from them and how you're using it. In Europe this is partly covered by GDPR, but in other parts of the world users don't benefit from the same protections. Internally, make sure only the people who need access to the data actually have it, to prevent misuse or leaks. Strong internal security practices, such as using a secure password manager to control access credentials, help protect sensitive data and reduce the risk of unauthorized exposure, which in turn reinforces user trust in AI-driven products.

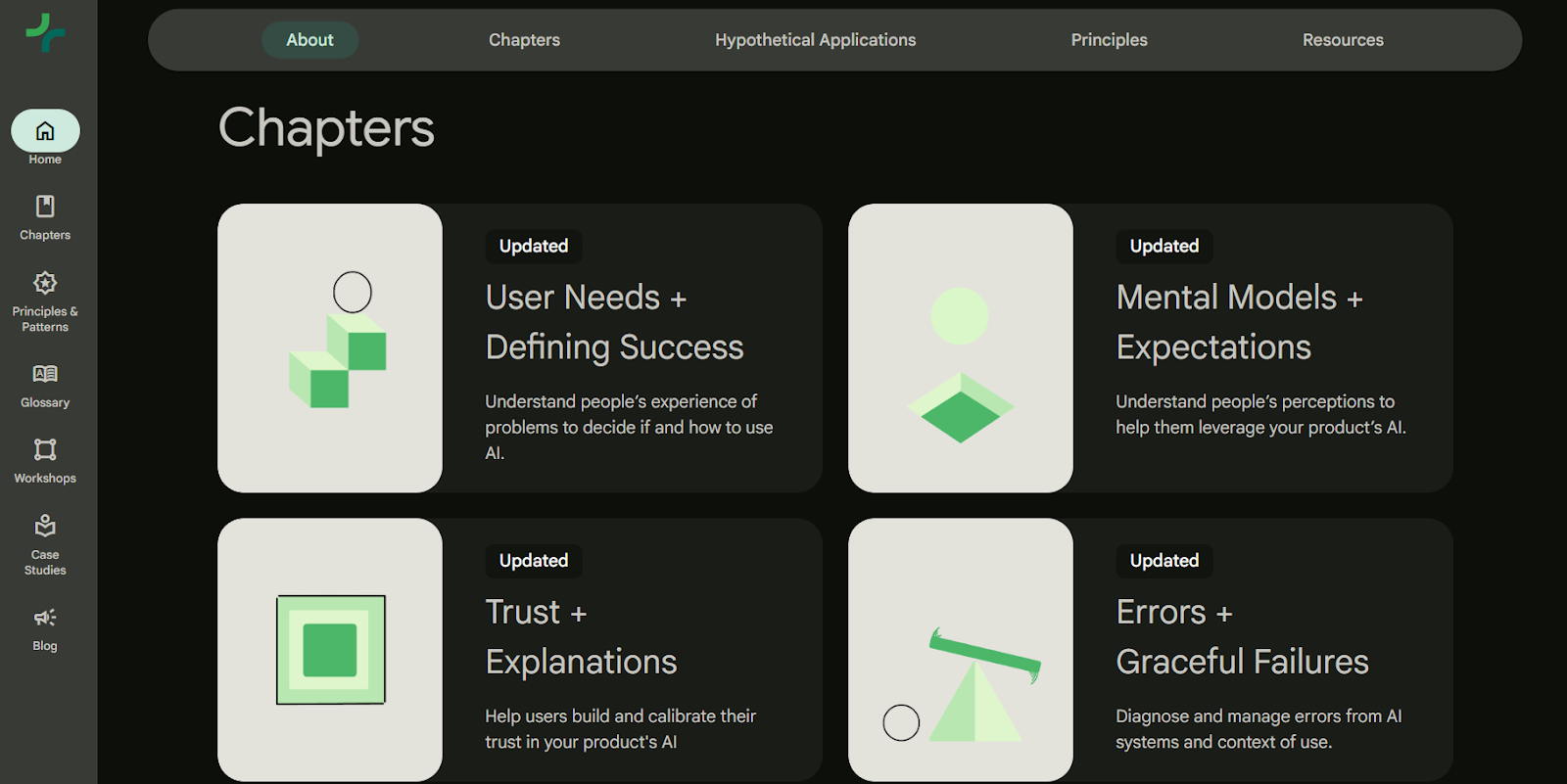

We've linked it before, and we'll link it again here: Google PAIR's People + AI Guidebook has an excellent resource on practices and policies for data collection and usage.

This point applies both to the data used to train AI products and to the data you collect from users for them. There have been numerous discussions about copyright when it comes to generative AI output, and several lawsuits on the subject are ongoing. On the surface, this doesn't seem to affect how people feel about LLMs. However, trust and ethics go hand in hand. It's hard to trust a product that's built on unethical practices, especially when it comes to data manipulation.

Notion is a good example here: it has published a public-facing document explaining how it uses data for its AI features.

3. Constantly evaluate your AI performance

One of the defining characteristics of AI is that its performance can change over time. Even without new training data or updates, an AI product's output and performance can vary. If the quality of the output drops significantly, users will be left wondering what happened and why the product isn't working as well as it used to. This, in turn, undermines the reliability of your AI product and users' trust in it.

To avoid this, continuously monitor the AI-powered parts of your product. This can be difficult, but it's an investment worth making because it determines whether people keep using your product in the long term. It's much harder to sustain a product that's constantly losing users.

There are quite a few resources on how to do this. We liked Datadog's guide on building an LLM evaluation framework, as well as this short guide for product managers.
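To make "constant monitoring" a bit more concrete, here's a minimal Python sketch of one common approach, assuming you keep a fixed set of test prompts, each with a simple pass/fail check, and re-run them regularly against your model. `EvalCase`, `pass_rate`, `has_regressed` and `fake_model` are hypothetical names, and the 5% tolerance is an arbitrary assumption; a real evaluation framework would use richer scoring than these toy checks.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    # Returns True when the model's output is acceptable for this prompt.
    check: Callable[[str], bool]


def pass_rate(cases: list[EvalCase], generate: Callable[[str], str]) -> float:
    """Run every case through the model and return the share that pass."""
    if not cases:
        raise ValueError("need at least one evaluation case")
    passed = sum(1 for case in cases if case.check(generate(case.prompt)))
    return passed / len(cases)


def has_regressed(current: float, baseline: float, tolerance: float = 0.05) -> bool:
    """Flag a regression when the pass rate falls more than `tolerance` below the baseline."""
    return current < baseline - tolerance


# Hypothetical stand-in for a real model call, with canned answers for the demo.
CANNED = {
    "Summarise: The meeting moved to Friday.": "The meeting is now on Friday.",
    "Translate 'hello' into French": "Bonjour",
}


def fake_model(prompt: str) -> str:
    return CANNED.get(prompt, "")


cases = [
    EvalCase("Summarise: The meeting moved to Friday.", lambda out: "Friday" in out),
    EvalCase("Translate 'hello' into French", lambda out: "bonjour" in out.lower()),
    EvalCase("List three primary colours", lambda out: len(out.strip()) > 0),
]

rate = pass_rate(cases, fake_model)
print(f"pass rate: {rate:.2f}")  # 2 of 3 cases pass -> 0.67
if has_regressed(rate, baseline=0.9):
    print("Quality dropped below the baseline: investigate before users notice.")
```

In practice you'd run a check like this on a schedule and after every model or prompt change, using the pass rate from a run you were happy with as the baseline.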

4. Track & manage errors

We've said it before: AI products, and especially generative AI products, provide non-deterministic experiences. This means that even with the same input, the output can differ considerably, for various reasons. Partly because of this, errors and unexpected results are not a matter of if, but of when.

Every product team is responsible for anticipating these errors as much as possible. Of course, it's impossible to predict everything that can go wrong. But some situations are easy to foresee just by thinking about the opposite of the result you want, or by asking yourself what a bad output looks like.

Simply leaving this issue open and saying, “well, that's just how AI works” is not good enough.

Once AI is introduced within a product, it becomes the team's responsibility to make sure it works properly and that there are mitigation plans in place for when things don't go as expected.

When errors happen in an AI product and users are left wondering what to do, their trust in the product suffers. To prevent this, consider the different ways in which the output might differ from what users expect or need, and plan how the product should respond in each case.
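One way to put the "what does a bad output look like?" question into practice is to check each model response for failure modes you can predict before showing it, and to pair each failure with a plain-language next step for the user. Below is a minimal Python sketch; `review_output`, `Result`, the `MAX_CHARS` limit and the messages are illustrative assumptions, and these three checks are far from a complete list of what can go wrong.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption: the longest answer this particular screen can display well.
MAX_CHARS = 2000


@dataclass
class Result:
    ok: bool
    text: str                        # what the user sees: the output or a plain-language message
    next_step: Optional[str] = None  # what the user can do when something went wrong


def review_output(output: Optional[str]) -> Result:
    """Check a model response for predictable failure modes before showing it."""
    if output is None:  # e.g. the request timed out and nothing came back
        return Result(False, "We couldn't get an answer this time.", "Try again in a moment.")
    cleaned = output.strip()
    if not cleaned:
        return Result(False, "The answer came back empty.",
                      "Try rephrasing your request or adding more detail.")
    if len(cleaned) > MAX_CHARS:
        return Result(False, "The answer was too long to show here.",
                      "Ask for a shorter version or split your request into parts.")
    return Result(True, cleaned)


for raw in [None, "   ", "Here is your summary."]:
    result = review_output(raw)
    print(result.text, "|", result.next_step or "(no action needed)")
```

The point of the design is that every failure path carries both an explanation and a way forward, so users are never left with a bare "something went wrong".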

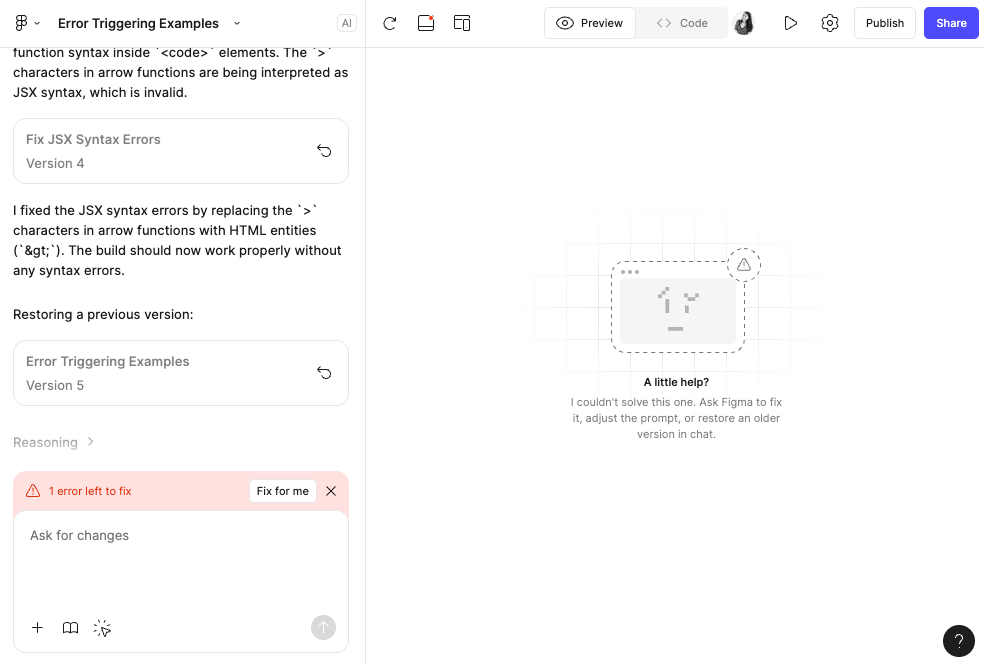

Figma Make is a good example here: when an error occurs, it tells users what they need to do to move forward and also offers to fix the problem for them.

5. Give users control

Giving users control is part of building a great AI experience, and it helps build trust as well. AI products span a broad spectrum, from fully automatic experiences to agentic products that require varying amounts of human input, but wherever yours sits, you should give users some degree of control within it.

Why is this important for building trust? When the output doesn't match users' expectations and they have no way to move forward or to revisit the output, they'll feel stuck and abandoned inside the product, left with an output they don't need or that isn't relevant to them. That's a pretty quick way to lose their trust. The simplest example of giving users control is the Regenerate option available in almost every LLM app today.

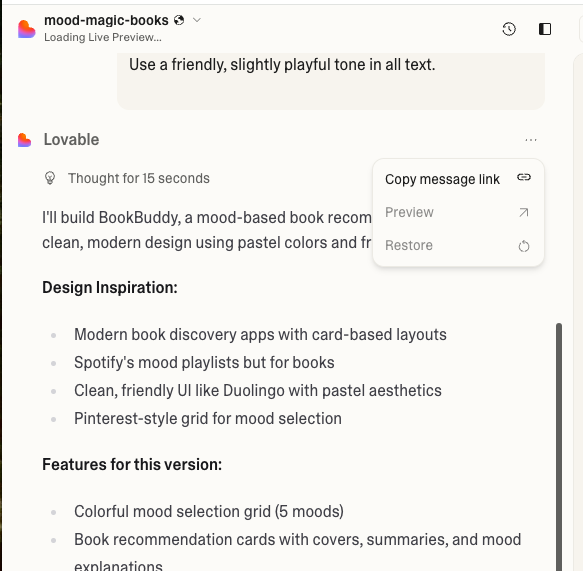

Another example is Lovable's option to restore a specific earlier version, which lets users go back if later iterations don't meet their expectations.
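Under the hood, Regenerate and restore can both rest on the same simple idea: keep every generated version instead of overwriting the previous one. Here's a minimal Python sketch, assuming outputs are plain strings kept in memory; `OutputHistory` and the lambda standing in for a model call are hypothetical, and we're not claiming this is how Lovable or any specific product implements it.

```python
import itertools
from typing import Callable


class OutputHistory:
    """Keeps every generated version so users can regenerate or go back."""

    def __init__(self, generate: Callable[[str], str]):
        self._generate = generate
        self._versions: list[str] = []
        self._current = -1  # index of the version the user is currently looking at

    def generate(self, prompt: str) -> str:
        """Create a new version and make it current. Regenerate calls this too,
        so earlier versions are kept rather than overwritten."""
        self._versions.append(self._generate(prompt))
        self._current = len(self._versions) - 1
        return self._versions[self._current]

    def restore(self, index: int) -> str:
        """Make an earlier version current again."""
        if not 0 <= index < len(self._versions):
            raise IndexError(f"no version {index}")
        self._current = index
        return self._versions[index]

    @property
    def current(self) -> str:
        if self._current < 0:
            raise LookupError("nothing generated yet")
        return self._versions[self._current]

    def __len__(self) -> int:
        return len(self._versions)


# Hypothetical model stand-in that labels each call with a draft number.
drafts = itertools.count(1)
history = OutputHistory(lambda prompt: f"{prompt} (draft {next(drafts)})")

history.generate("Landing page")  # first attempt
history.generate("Landing page")  # user hits Regenerate
history.restore(0)                # the second draft was worse: go back
print(history.current)            # Landing page (draft 1)
print(len(history))               # 2
```

A real product would persist these versions and probably store the prompt alongside each one, but the principle stays the same: nothing the user has seen is thrown away.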

Additionally, if an undesirable output gets in the way of users achieving their goals, the situation becomes even more serious.

To avoid this, give users some control over the AI experience so they can move forward even if something goes wrong. It's also important to tell users upfront what they need to do and what the product can help them with. This brings us right to our next point, which is...

6. Talk like a human, not like a computer

One easy way to lose trust is to talk in a way your users can't relate to or don't understand. Throughout your product, from onboarding to error messages, make sure your UX writing is clear, simple and uses language that doesn't require special knowledge from your users. The more technical your explanations of how the product works, what it does and how it can help, the less users will understand it, and you can't really trust something you don't understand.

There are a few things to keep in mind here:

- Simple, clear, concise language. Skip the jargon, keep it simple and clear.

- Mention user benefits. Don't explain the product features; tell users how the product benefits them. For users, it makes no difference whether your product uses OpenAI's o3 or Claude Sonnet 4. What matters to them is a clear explanation of how it helps them.

- Use plain language. Especially if you come from a technical background, it's tempting to put everything in technical terms. Instead, try to put yourself in your users' shoes and consider how much of your messaging they would understand without that background.

- Provide relevant information. When an error happens, try to tell users why it's happening. Just telling them it's an error without letting them know what went wrong will likely cause the situation to happen again, leaving users frustrated and affecting their trust.

Clear, simple, easy-to-understand messaging is one of the easiest ways in which you can build trust in any product. And it's even more important in AI products since these sometimes work like black boxes, causing even developers to wonder why they're behaving in a certain way.

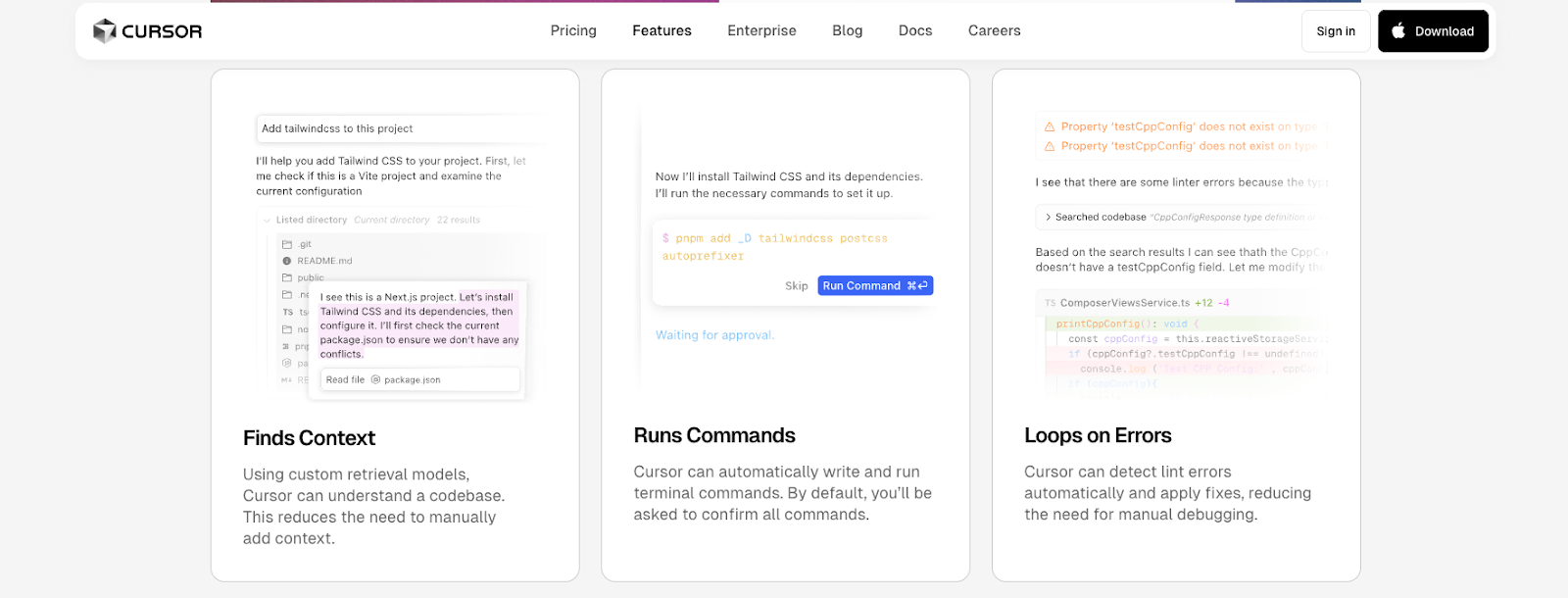

Cursor does a great job with this, explaining their product features in a way that's easy even for non-technical users to understand.

7. Build guardrails against misuse

Generative AI products, especially image and video generators, are very easy to abuse and can be used to harm other people or for other negative purposes. Even if this doesn't reduce how much the product is used, it will damage its reputation in the long run and, in turn, the trust people have in it. Ultimately, AI products should be used to create better things and make improvements, not to cause harm.

At one point, OpenAI addressed this by adding a marker to images generated with ChatGPT, letting others know that the image was AI-generated.

To summarise

Trust matters for every product, and even more so for AI products. Follow these steps to build a good, honest and transparent relationship with your users.