Tackling user activation on Ranking Raccoon: Challenges and lessons learned

Ranking Raccoon is a community-based platform built by UX studio that connects ethical marketers and SEOs to build quality links.

After signing up, users register their first site, which then undergoes a brief, manual quality check. Only once their site has been approved can they get in touch with other site admins and start chats to discuss link collaborations. While users appreciate the friction necessary to establish quality over quantity, we observed its negative impact on user activation.

Also, we quickly realized that activation challenges went beyond the initial hurdle: many users with approved sites simply weren't activating, pointing to deeper, less obvious problems. This pushed us to roll up our sleeves and tackle user activation.


Unpacking the problem

Imagine inviting someone to a vibrant party, only for them to arrive and find that no one is talking. This is how low engagement hurts a community-based product: users can't get what they expect out of it. On Ranking Raccoon, if users are not talking to each other, they are not building links, which is a major threat to our core value proposition: the entire platform exists for link building.

We knew we had a problem, but initially we didn't grasp its full scale. To truly understand it, we first needed to align on our definition of user activation within the context of the product: what does it mean for a Ranking Raccoon user to get activated?

We decided to focus on the first action a user can take that may lead them towards the happy path of getting a link: starting a chat with another site admin by sending their first link request.

With that definition in place, we started digging into the available data. Our event-based analysis revealed that our average activation rate was as low as 27%. This meant that only around a quarter of new users started a chat with another site admin within a week of getting their site approved.
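To make the metric concrete, here is a minimal Python sketch of how a weekly activation rate like this could be computed from two timestamps per user. The function name, the dictionaries and the sample users are hypothetical; this is an illustration under those assumptions, not the actual Mixpanel setup.

```python
from datetime import datetime, timedelta


def weekly_activation_rate(approved_at, first_request_at, window_days=7):
    """Share of approved users who sent a first link request within the window.

    approved_at:      dict of user id -> datetime the user's site was approved
    first_request_at: dict of user id -> datetime of the first link request
                      (users who never sent one are simply absent)
    """
    if not approved_at:
        return 0.0
    window = timedelta(days=window_days)
    activated = 0
    for user_id, approved in approved_at.items():
        sent = first_request_at.get(user_id)
        # Assumption: only requests sent after approval and within the window count.
        if sent is not None and timedelta(0) <= sent - approved <= window:
            activated += 1
    return activated / len(approved_at)


# Hypothetical sample data: four approved users, one activates within a week.
approved = {
    "ana": datetime(2024, 1, 1),
    "ben": datetime(2024, 1, 1),
    "cleo": datetime(2024, 1, 2),
    "dev": datetime(2024, 1, 3),
}
first_request = {
    "ana": datetime(2024, 1, 3),   # 2 days after approval: activated
    "ben": datetime(2024, 1, 20),  # 19 days after approval: too late
}

rate = weekly_activation_rate(approved, first_request)
print(f"Weekly activation rate: {rate:.0%}")
```

Anchoring the window to each user's approval date, rather than to a calendar week, keeps the metric comparable between users approved on different days.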

The goal: defining success

With a clear picture of the problem, we knew we had to act on it. We first needed to define what success would look like for us. This alignment was crucial for objectively assessing our efforts and ensuring we were going in the right direction.

We agreed that at least half of new users should initiate a conversation within the first week after their site was approved. From there, we set a more ambitious goal: a 60% weekly activation rate. In other words, we aimed for 60% of new users to send their first link request within seven days.

We also recognized the broader impact this could have. By boosting activation, we could significantly improve user retention as well, as users with ongoing conversations would naturally be more likely to return to the platform.

Identifying core issues

After acknowledging the problem's existence and its significant impact, our first step toward effective solutions was to find out exactly why it was happening in the first place. We had many assumptions, but only real-world data could truly help us understand the situation.

We collected data from multiple sources: Mixpanel events and funnels, usability tests, surveys, email campaign open rates, and session recordings. As with our initial assumptions, the data pointed to several possible root causes rather than a single one.

1. Lengthy signup and onboarding

Getting started on Ranking Raccoon was a significant commitment for new users. Besides typical account setup, our quality-first approach required several verification steps: users had to verify their LinkedIn profile and provide detailed site data for our manual review process. This made the signup and early onboarding journey inherently lengthy and high-effort.

We saw a clear opportunity to make this early experience smoother. However, we weren't willing to compromise our careful verifications. They were crucial for establishing trust in this spam-prone industry, and losing that trust was not an option for us.

2. The “waiting game” drop-off

Our manual site verification, an essential part of our quality checks, typically takes one business day. During this "waiting game," users couldn't send link requests or interact with other site admins. Consequently, most users would just close the site at this point.

The impact was clear: a significant 30% churn rate. Despite having their sites approved and receiving email notifications, nearly a third of our users simply never returned to the platform. Whether they forgot, lost interest, or got sidetracked, this was a major problem for us.

So, we started asking: how could we engage users during this crucial waiting period, instead of letting them just wait passively?

3. Lack of guidance

With products like Ranking Raccoon, which are community-based and work similarly to marketplaces, the real-world scenario often differs significantly from Figma-designed prototypes. Because of this, we decided to run usability tests with the actual product, having people sign up on a call with us and capturing their unfiltered reactions.

The very first insight from these tests was clear: users missed guidance on how to get started. The list of sites felt overwhelming, leaving them unsure how to narrow it down. They weren't sure what to focus on first: Metrics? Topic relevance? Admin activity? What criteria should they even filter for?

One user's feedback captures this sentiment very well: “What would the platform recommend? (...) There's nothing sort of suggesting who I should prioritize”. This led us to consider guiding users and providing more curation in their experience.

4. Limited relevant opportunities

The second key finding from our tests was: for sites in specific niches, our network simply wasn't relevant or broad enough. Users struggled to find good matches within their exact niche. For example, we have sites as specific as a fishing bait business and a site about mushrooms.

Beyond that, we realized we weren't helping users discover opportunities in less obvious places. For instance, a mushroom website might find a highly relevant link placement in a travel blog featuring an article on mushroom hunting locations. This kind of nuanced opportunity was almost impossible to discover with the product in its current state.

We drew two clear action points from this: first, we needed to significantly grow the number of sites on Ranking Raccoon; and second, we had to help users uncover any type of potential opportunities, even the less obvious ones.

How we solved it

After collecting all our insights on the issues and action points, we brainstormed potential fixes and decided on a set of solutions to tackle user activation. Over two dedicated quarters, we developed product changes and features focused on four key areas.

Better guidance and enhanced relevance

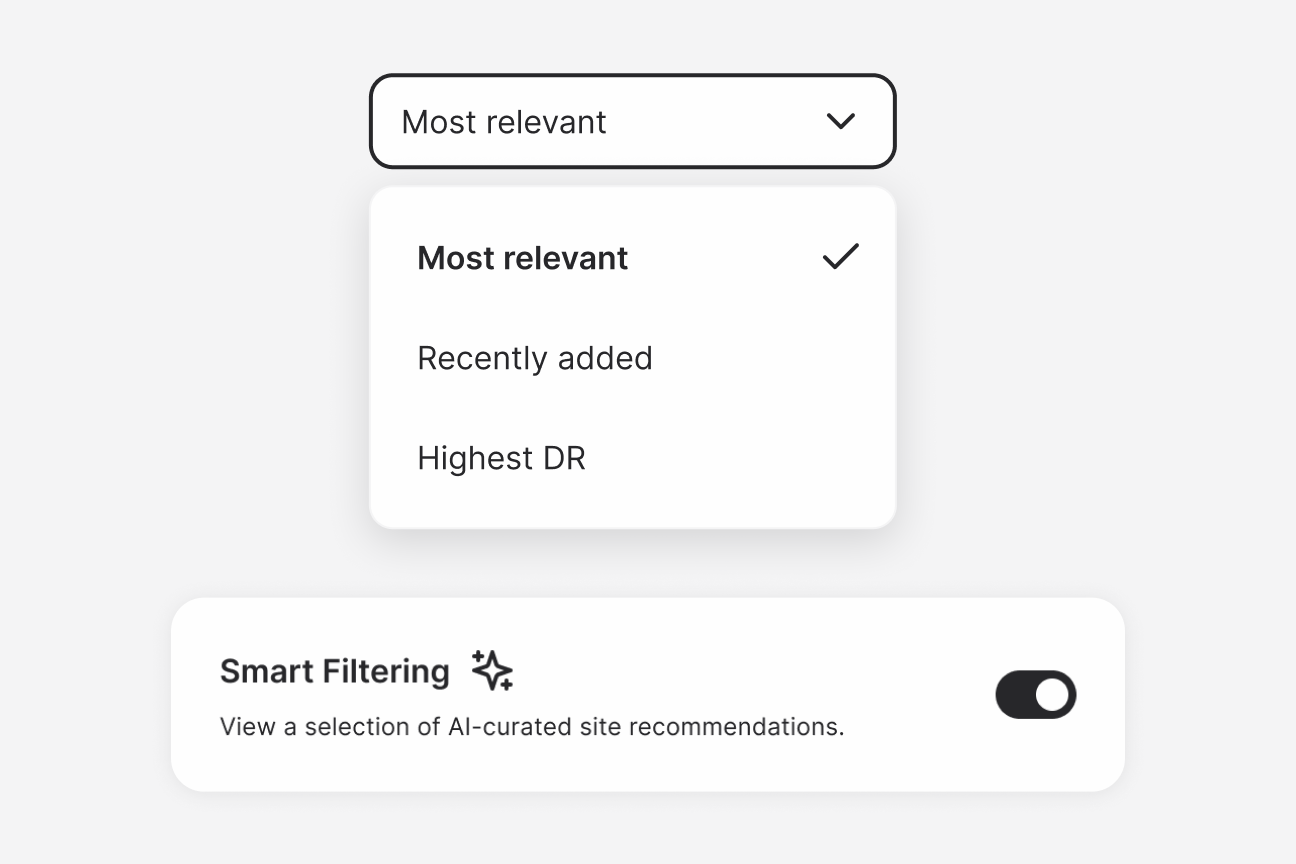

Relevance-based sorting & filtering

We saw a clear opportunity to leverage AI to help users find their ideal matches. Through AI, we started calculating how similar sites on the platform were to each other. This allowed us to make an estimation of which sites were most relevant for each user.

Based on this insight, we provided users with a new way to sort their site list by relevance, ensuring the most relevant options appeared at the top. We also introduced a 'Smart Filtering' option, which automatically hid sites below a certain relevance threshold, making the search more efficient and proactively narrowing down the list for users.
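As an illustration of relevance-based sorting and 'Smart Filtering', the sketch below ranks candidate sites by cosine similarity between content vectors and optionally hides those under a threshold. The article does not say how similarity was actually calculated, so the cosine measure, the three-dimensional vectors, the threshold value and all names here are assumptions.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_sites(user_vector, candidates, min_relevance=None):
    """Return (site, score) pairs sorted from most to least relevant.

    candidates:    dict of site name -> content vector (hypothetical embeddings)
    min_relevance: if set, sites scoring below it are hidden ("smart filtering")
    """
    scored = [
        (name, cosine_similarity(user_vector, vector))
        for name, vector in candidates.items()
    ]
    if min_relevance is not None:
        scored = [(name, score) for name, score in scored if score >= min_relevance]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical vectors; a real system would use far more dimensions.
mushroom_site = [1.0, 0.0, 0.0]
sites = {
    "travel-blog": [0.9, 0.1, 0.0],
    "fishing-bait": [0.0, 0.2, 0.9],
    "foraging-guide": [1.0, 0.0, 0.1],
}

sorted_by_relevance = rank_sites(mushroom_site, sites)
smart_filtered = rank_sites(mushroom_site, sites, min_relevance=0.5)
print([name for name, _ in sorted_by_relevance])
print([name for name, _ in smart_filtered])
```

Keeping sorting and filtering in one function means the filter is just a stricter view of the same ranking, so the two options cannot disagree about which sites are most relevant.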

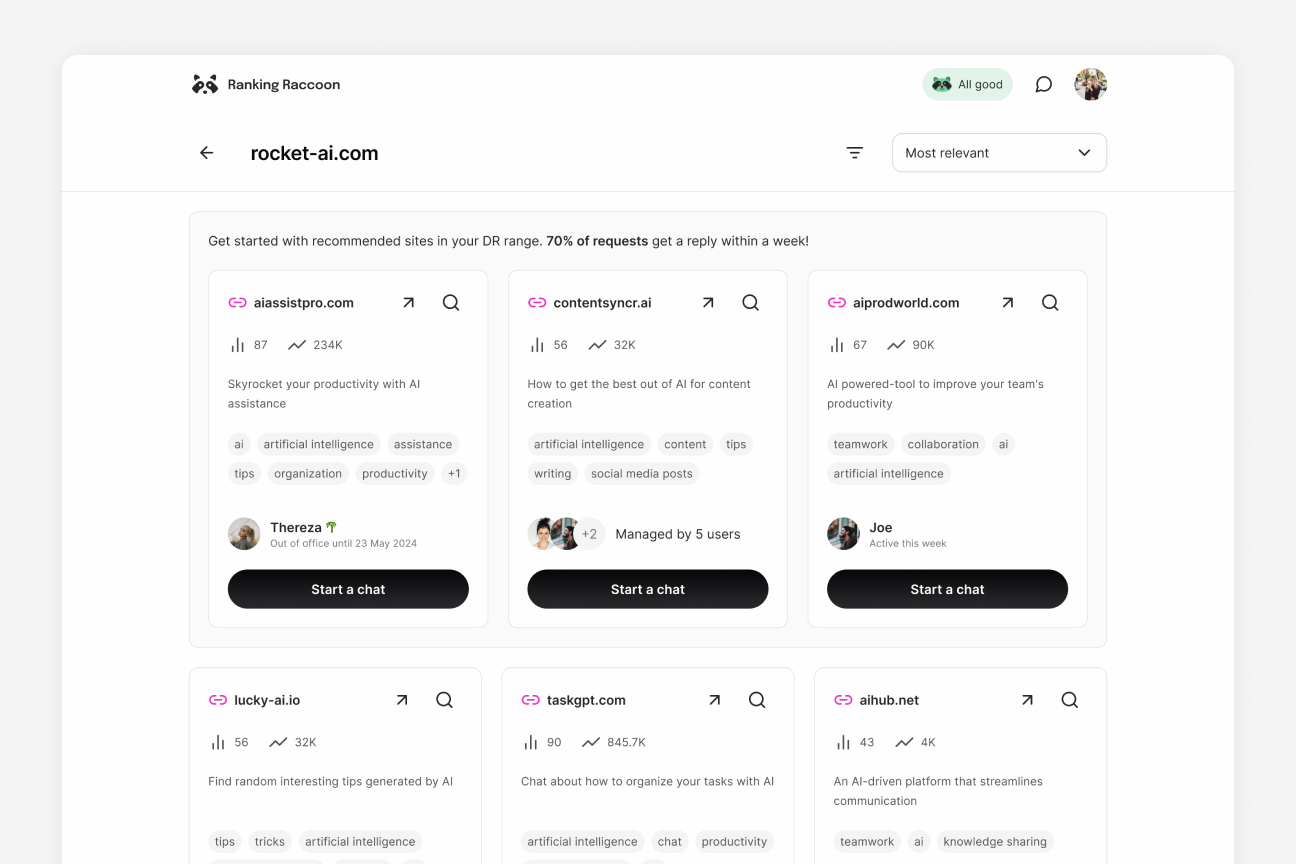

Relevance-based recommendations

Building on our relevance calculations, we introduced an additional factor: Domain Rating (DR) range. We started highlighting the top 3 most relevant sites in users’ DR range, aiming to help them get started faster. These recommendations were also sent via email to boost our welcome campaign.

Our decision to include DR range was based on product usage data and user interviews, which indicated that site admins are generally more willing to link to other sites in a similar or higher DR range than theirs. This approach not only helps users start chats but also increases their chances of a successful collaboration.
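The combination of relevance and DR range can be sketched as a filter followed by a sort. How wide the "DR range" is in the product is not stated, so the symmetric tolerance of 10 points, the field names and the sample sites below are all hypothetical.

```python
def recommend_sites(user_dr, candidates, dr_tolerance=10, limit=3):
    """Pick the most relevant candidate sites whose DR is close to the user's.

    candidates:   list of dicts with 'name', 'relevance' (0..1) and 'dr' (0..100)
    dr_tolerance: assumed half-width of the user's DR range, in DR points
    """
    in_range = [
        site for site in candidates
        if abs(site["dr"] - user_dr) <= dr_tolerance
    ]
    in_range.sort(key=lambda site: site["relevance"], reverse=True)
    return in_range[:limit]


# Hypothetical candidates for a user whose own site has a DR of 40.
candidates = [
    {"name": "A", "relevance": 0.90, "dr": 45},
    {"name": "B", "relevance": 0.95, "dr": 80},  # most relevant, but far outside the range
    {"name": "C", "relevance": 0.70, "dr": 35},
    {"name": "D", "relevance": 0.80, "dr": 42},
    {"name": "E", "relevance": 0.60, "dr": 38},
]

top_three = recommend_sites(user_dr=40, candidates=candidates)
print([site["name"] for site in top_three])
```

Filtering by DR before sorting means a highly relevant but out-of-range site, like B in this sample, never crowds out a match that is more likely to reply.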

To track the effectiveness of these relevance-based efforts, we set up Mixpanel funnels. We monitored new users from site approval to seeing recommendations, and checked how many would start a chat directly from those. The overall conversion from viewing recommended sites to sending a request was around 25%.

Increasing opportunities and discoverability

1-click site addition & partner sites

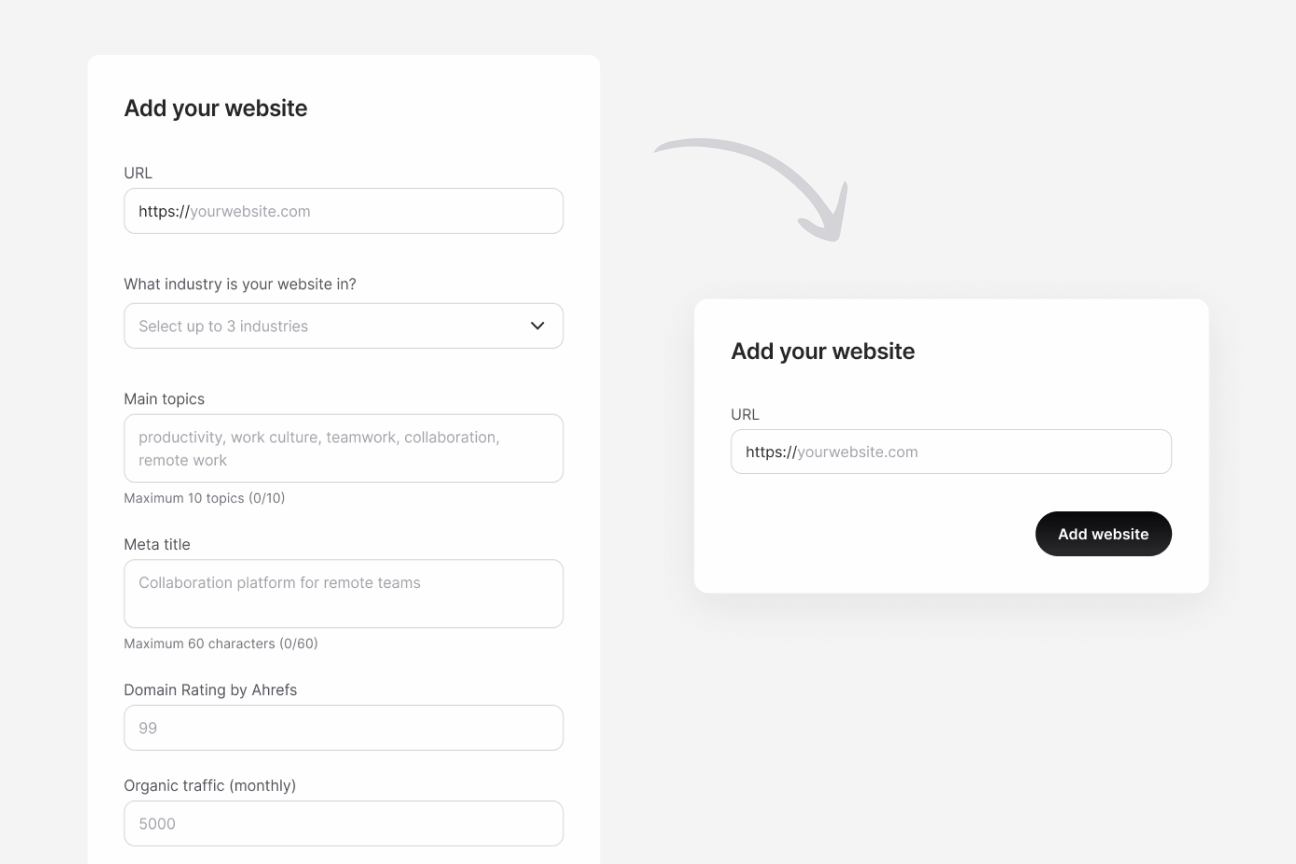

Knowing we didn't have enough sites for users in specific niches to find good matches, it was clear we needed to make adding sites more seamless. We knew from interviews that many of our users work with multiple sites, yet we noticed they weren't adding all of them to their profiles. So, we started by researching why.

First, we realized our existing site addition process involved a significant amount of work: users had to manually provide meta titles, topics, industry, and site metrics. Having recently worked with AI, we saw another opportunity to bring it in to collect this data for us.

We started scraping sites and using AI to structure all the necessary information. This streamlined site addition to a single step: entering a URL. We also introduced a "bulk adding" option, allowing users to import multiple sites at once via a spreadsheet.

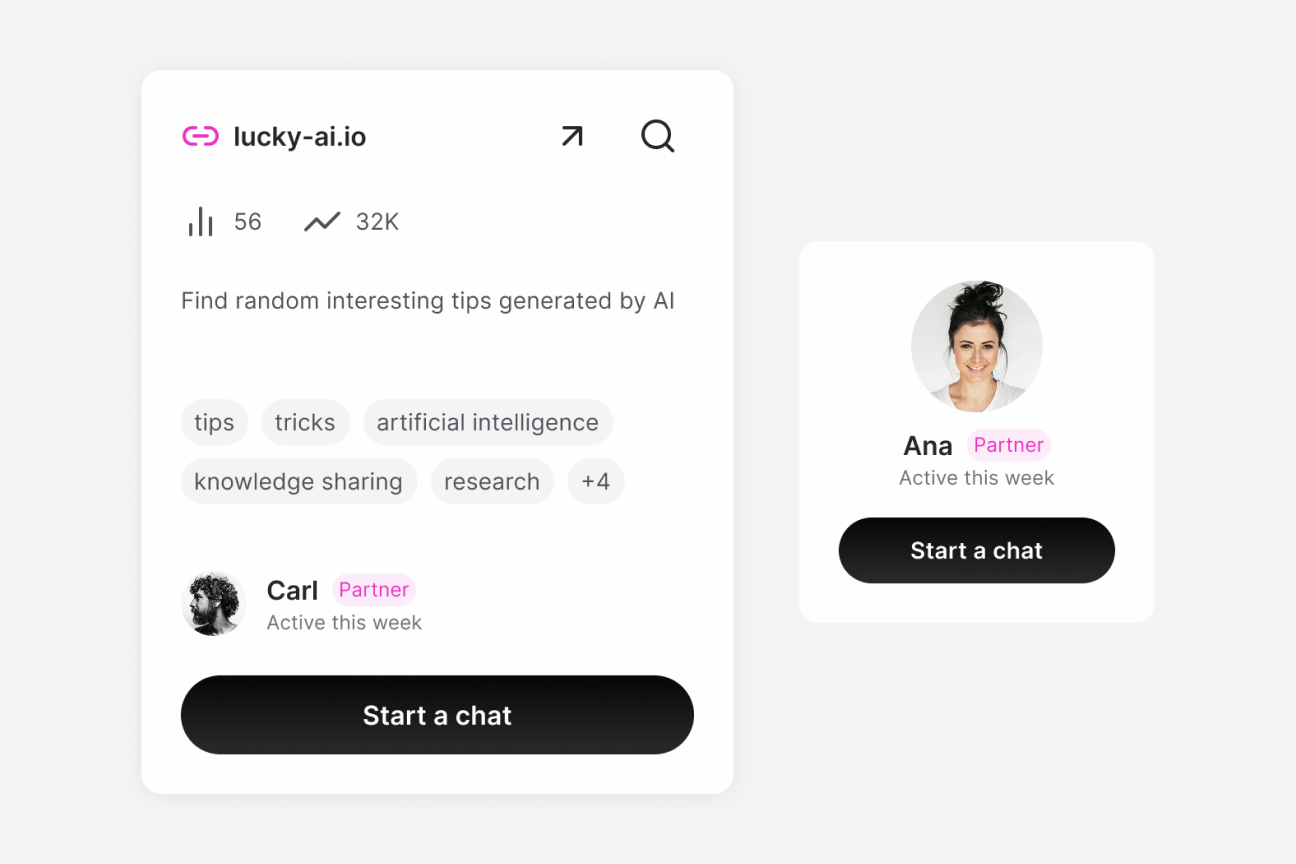

Moreover, we ran a survey with users who listed unregistered sites in their messages, which revealed a key reason for not adding all their sites: they didn't feel comfortable adding partner sites on their profile, because they felt they were claiming ownership they didn't have.

To address this, we started allowing users to mark sites as "partner sites." This made the information transparent upfront, letting others know these weren't directly owned sites and might require a bit more back-and-forth for publishing links.

These efforts led to a 100% increase in new sites added from one quarter to the next. Indirectly, it also helped reduce the drop-off rate by 5% on the signup step where users had to add their first site.

Pages search

For less obvious opportunities, we implemented a feature that allowed users to search each site for their target keyword without leaving Ranking Raccoon. This feature would return pages and articles on that site that mention the keyword of interest, helping users get to potential link placements much faster.

Later, we expanded this functionality into a dedicated tab on the recommendations page. This allowed users to search for their target keyword across all sites on the platform. Here, we focused the results on the top sites most relevant for that keyword, listing the specific pages that mentioned it.

The search tab received a lot of love in live sessions we had with our users. Most told us they'd prefer this over browsing the list of sites, as it significantly reduced the steps of opening each site and manually looking for relevant placements.

One user pointed this out exactly: “Oh wow, that’s really cool, so I’m not manually searching through each site!”. Another shared: “I’ll use the pages tabs more - it’s a better overview of the exact pages and sites I need.”

Streamlining the user journey

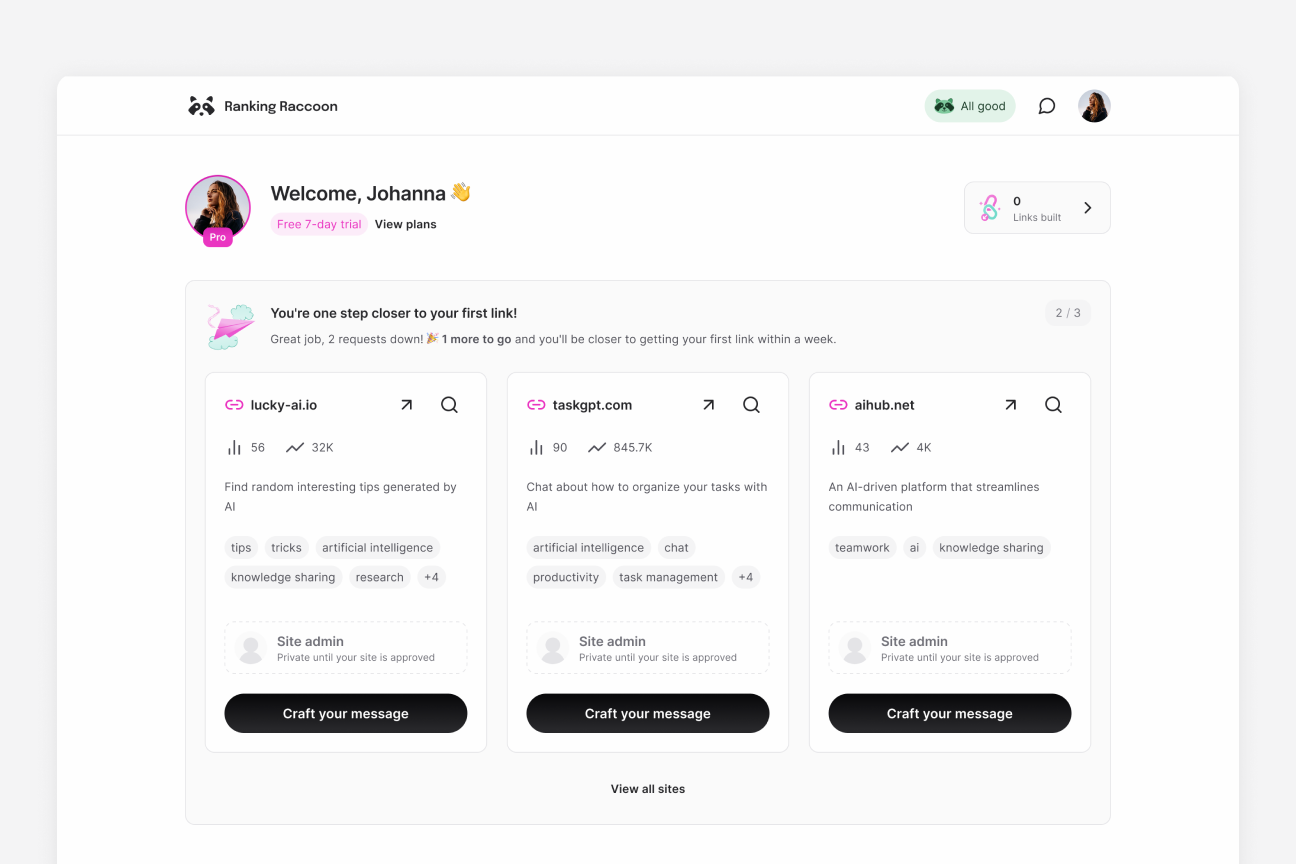

Homepage recommendations

Previously, users landing on their homepage faced an extra step. Before they could even browse sites, they first had to go to their list of sites and click 'Build links' on one of them to reach the recommendations.

To shorten the journey toward the first request sent, we decided to bring site recommendations forward. We integrated a banner on the homepage, highlighting the top 3 recommended sites (combining relevance and DR range) and prompting users to get started right away.

We created a dedicated Mixpanel funnel to track requests sent from this new entry point. The overall conversion from viewing these homepage recommendations to sending a request was around 12%, which was about half of the conversion rate we saw from the site highlights on the recommendations page itself.

AI message draft

When users contact another site admin, they start by filling out a message field in a form. While we offered tips in various ways on how to approach someone for link building, it could still feel quite intimidating to initiate a direct chat, especially compared to the familiar practice of emailing.

So, we brought in AI again, this time to craft a personalized message draft. The draft incorporates data about both users' sites and highlights their overlaps, stating why a collaboration would be a good idea.

Although some users prefer to edit and give the message their own personal touch, many others appreciate the efficiency of the draft and how it's personalized, not just a generic template.

Engaging users during waiting period

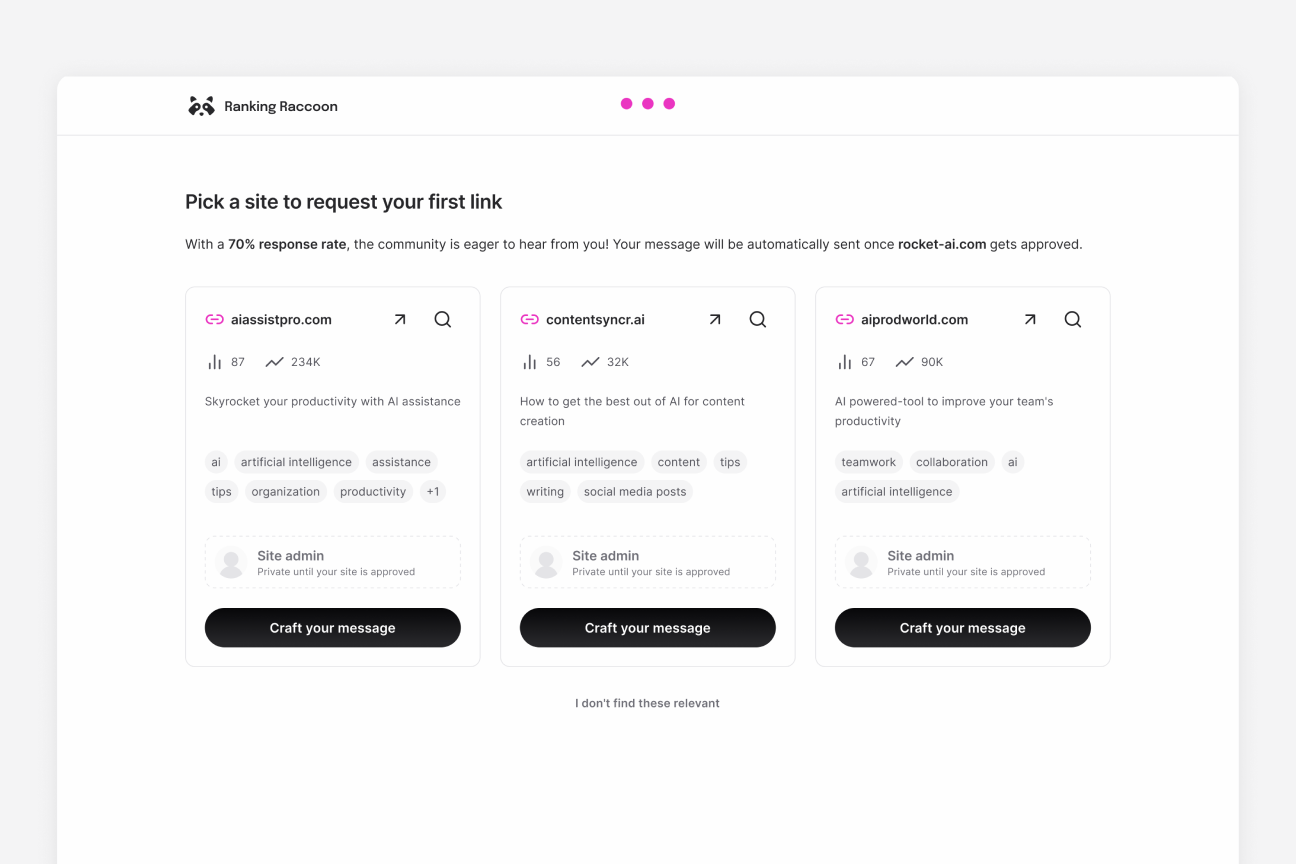

Sending pending requests on signup

To tackle the one-business-day site review wait, we introduced a focused step right after the existing signup process. Here, we showed new users the top 3 most relevant sites, prompting them to craft their very first request right away.

To protect existing users and maintain a trustworthy environment, the message is sent automatically only after the new user's site is approved. Until then, users can't view the identity of the person they're contacting.

We tracked requests sent on signup with a dedicated Mixpanel funnel, which showed an impressive 35% conversion rate, making it one of our most successful activation initiatives. Once their site is approved, users not only have an ongoing conversation, but they might even have a reply waiting for them, significantly speeding up their journey toward getting a link.

The outcome and challenges

Zooming out from the individual changes, you might ask: did all of this work? What's the overall impact of these UX efforts?

We continuously watched our weekly activation rate. After releasing most of the changes and features, we reached an impressive 70%. However, we weren't able to sustain such a high level, and for much of the time, we remained below our 60% goal.

Many challenges made it difficult to assess the effectiveness of our efforts and to reach that goal itself. To start, because we were tracking the journey of new users, and our influx of new users wasn't very high, it took us quite a long time to properly understand how effective something was. We had to wait for new users to trickle in and interact.

This low volume of new users each week led to high data volatility when tracking our activation rate, making us less confident about any variations. We saw drastic drops or highs from one week to the next due to the small number of users.
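One way to see why small weekly cohorts make the rate so jumpy is to put a confidence interval around it. The sketch below uses the Wilson score interval, a standard choice for proportions with small samples; it was not part of the analysis described in this article, and the cohort sizes are made-up numbers.

```python
import math


def wilson_interval(successes, total, z=1.96):
    """Approximate 95% Wilson score interval for a proportion (z=1.96)."""
    if total == 0:
        return (0.0, 1.0)  # no data: the rate could be anything
    p = successes / total
    denominator = 1 + z ** 2 / total
    center = (p + z ** 2 / (2 * total)) / denominator
    margin = (
        z * math.sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denominator
    )
    return (max(0.0, center - margin), min(1.0, center + margin))


# Hypothetical cohorts with the same observed 70% activation rate.
small_cohort = wilson_interval(successes=7, total=10)
large_cohort = wilson_interval(successes=700, total=1000)

for label, (low, high) in [("10 users", small_cohort), ("1000 users", large_cohort)]:
    print(f"{label}: between {low:.0%} and {high:.0%}")
```

With only ten new users, an observed 70% is compatible with a true rate well below or well above the 60% goal, which is why a single good or bad week says little on its own. Pooling several weeks into one cohort narrows the interval at the cost of slower feedback.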

Lastly, regarding relevance and how well sites in the product suited new users' needs: we could calculate relevance based on content and get an idea of how many good opportunities users might find. However, in the end, each user, company, or site has their own subjective requirements and assessment of what constitutes a good link collaboration. This makes "relevance" something that's significantly out of our direct control.

Takeaways

The entire journey we took to achieve better user activation led us to three main takeaways as a product team:

- Measure everything as soon as possible: This takes us back to the beginning and the realization of the problem. We had a gut feeling that something wasn't right, but only by objectively looking at the metrics did we realize we needed to act immediately. Setting a precise metric as a goal was also extremely helpful.

- Volumes matter for reliable assessment: If user volumes are low, metrics might not be as reliable, and that needs to be kept in mind. High data volatility can make it tricky to see the actual effect.

- Real-product testing is essential: In products like Ranking Raccoon, where users and their sites shape the value delivered, testing the actual product is crucial. Prototypes can be designed with ideal scenarios, but they won't reflect the reality of your live platform.

What's next

We have more product changes planned that stemmed from these efforts and could still positively impact user activation.

One of them is extending the number of pending requests that can be sent during the waiting period. Instead of just a single shot in a focused step, users will be able to craft multiple messages before their site is approved, in their own time.

Lastly, homepage recommendations will include better guidance. Based on the average success rate of the product, we're calculating how many requests users should send to have a good chance of securing a link, and guiding them toward that target number.
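A simple way to reason about such a target, assuming every request succeeds independently with the same probability p, is that the chance of at least one link after n requests is 1 - (1 - p)^n. The sketch below solves that for n. The independence assumption, the 20% success rate and the 80% target are illustrative only, not figures from the product.

```python
import math


def requests_needed(success_rate, target_probability=0.8):
    """Smallest number of requests giving at least the target chance of one link.

    Assumes each request succeeds independently with probability success_rate.
    """
    if not 0 < success_rate < 1:
        raise ValueError("success_rate must be between 0 and 1 (exclusive)")
    if not 0 < target_probability < 1:
        raise ValueError("target_probability must be between 0 and 1 (exclusive)")
    # Solve 1 - (1 - p)^n >= target for n, then round up to a whole request.
    return math.ceil(math.log(1 - target_probability) / math.log(1 - success_rate))


# Hypothetical inputs: 20% of requests lead to a link, aiming for an 80% chance.
target = requests_needed(success_rate=0.2, target_probability=0.8)
print(f"Send at least {target} requests")
```

In practice success rates vary with relevance and DR fit, so a number like this is better treated as a rough nudge than a guarantee.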

Conclusion

Looking back at our activation journey, we learned that getting new users to truly engage means starting by getting to know your goal inside out, then relentlessly digging into why you haven't reached it yet, and finally, being brave enough to try different solutions.

It wasn't always smooth sailing, especially considering the high data volatility and the puzzle of defining user-specific “relevance”. But by staying data-driven, user-centric, and committed to iteration, we not only saw our activation rate climb but also gained invaluable experience. We learned that complex challenges may require painful and slow steps, but can lead to rewarding growth and learning.
