Does your business really need a chatbot?

As more and more businesses introduce AI chatbots to their products and websites, it may start to feel like a must-have solution. But is it really what users want?

This post helps you decide whether your business needs an AI chatbot, and if so, what it should and shouldn’t do. We’ll look at the two main types of AI chatbots, review use cases and trends by industry, walk through the three most common business considerations, and cover what tends to be left out of the conversation: the ethical challenges of AI use.

The two main types of AI chatbots

The distinction between a traditional chatbot and an AI interface like ChatGPT is increasingly blurred. Both are designed to support conversational interaction.

ChatGPT and other large language models can be viewed as advanced, multimodal versions of a chatbot, with the key difference being scope: while general-purpose AI systems can engage in open-domain conversation, traditional chatbots typically operate within the boundaries of a specific product or service.

Despite this difference in range, the underlying interaction pattern is similar. This raises a practical consideration for businesses: the need for dedicated chatbots may diminish as AI integrations become capable of covering a wider set of tasks with greater flexibility.

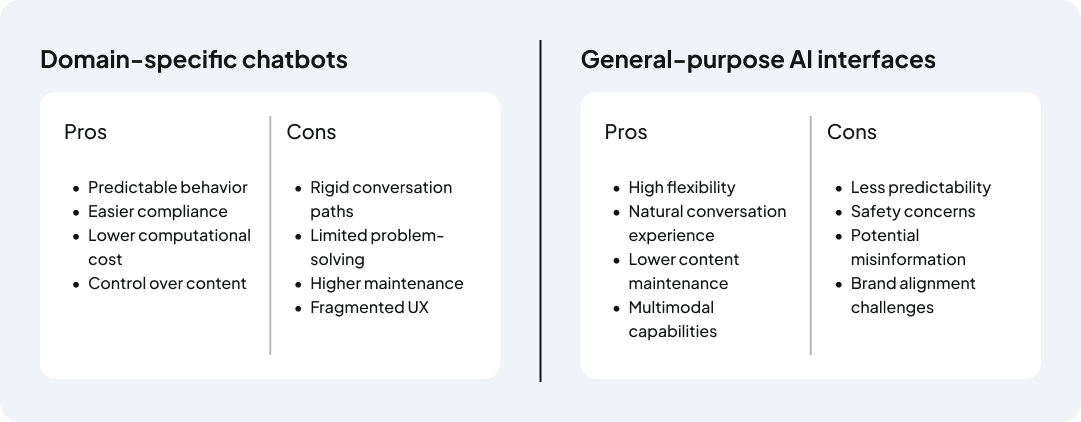

So, consider whether your business needs a tailor-made chatbot trained on specific tasks, or the integration of an existing AI interface.

This difference in capabilities also changes how businesses should think about chatbot strategy in different contexts. The role of conversational systems varies between industries and verticals, because expectations, complexity, and user goals are different.

To help you decide whether a traditional chatbot or an AI-driven interface is the more appropriate solution for you, let’s quickly review which one fits which type of business.

Industry trends for chatbot UI

Healthcare

One of the most promising use cases for AI chatbots is in healthcare, as demand for remote care rose in the wake of the COVID-19 pandemic.

Beyond B2C products, such as diagnostic support, self-monitoring, counselling and data collection, B2B chatbots have also emerged, mainly focusing on hospital management.

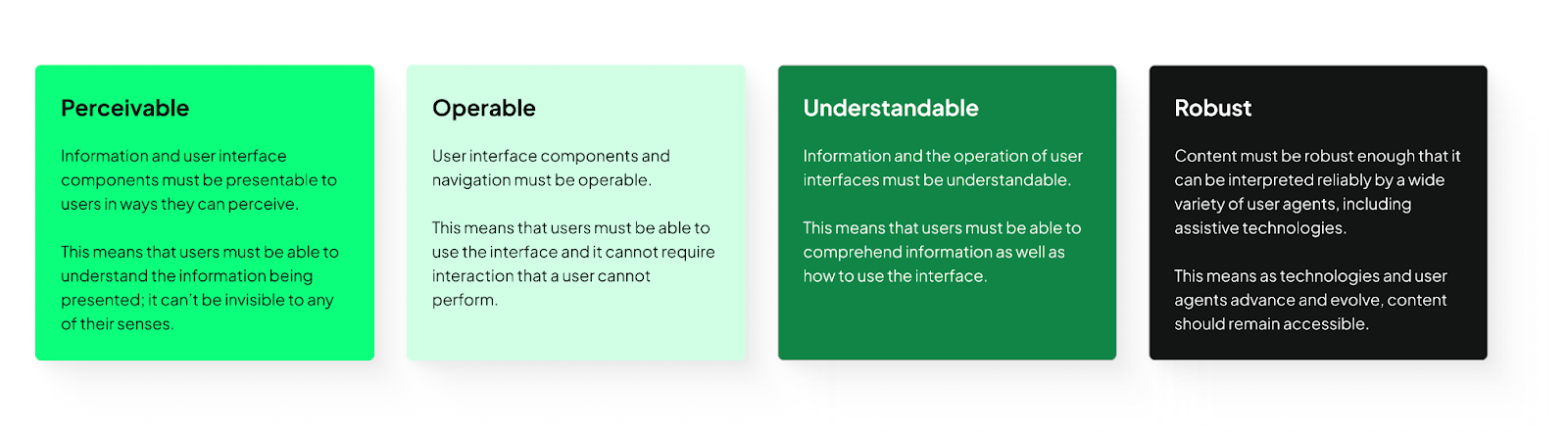

A systematic review by Grassini et al. found that the biggest challenge faced by healthcare chatbots is inclusivity, especially with regard to elderly and disabled patients.

UX/UI design plays a great role in this, as it can significantly improve accessibility on multiple fronts. We recommend using the UX Design Institute's accessibility checklist. The POUR model sums up the main principles.

SaaS

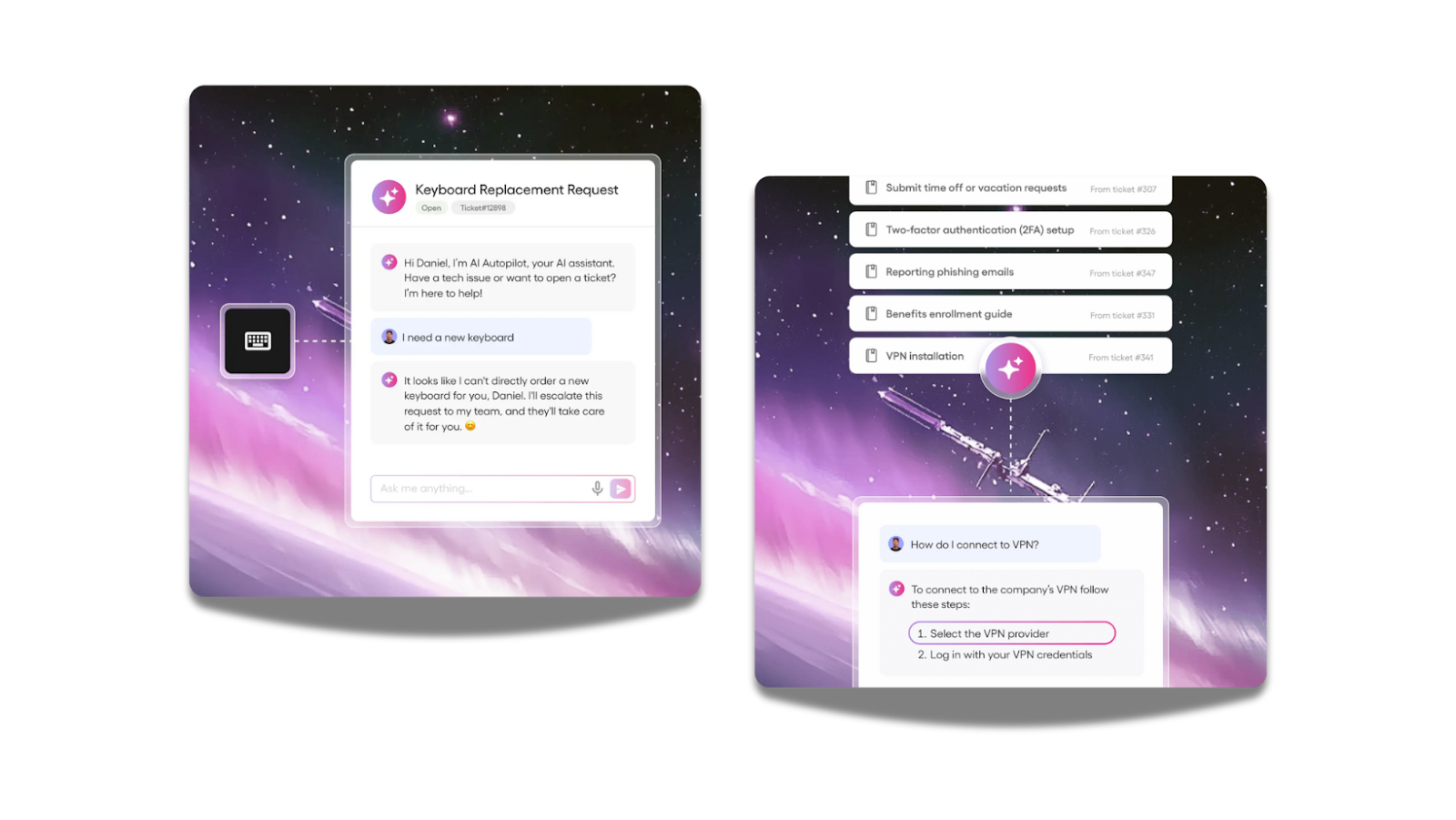

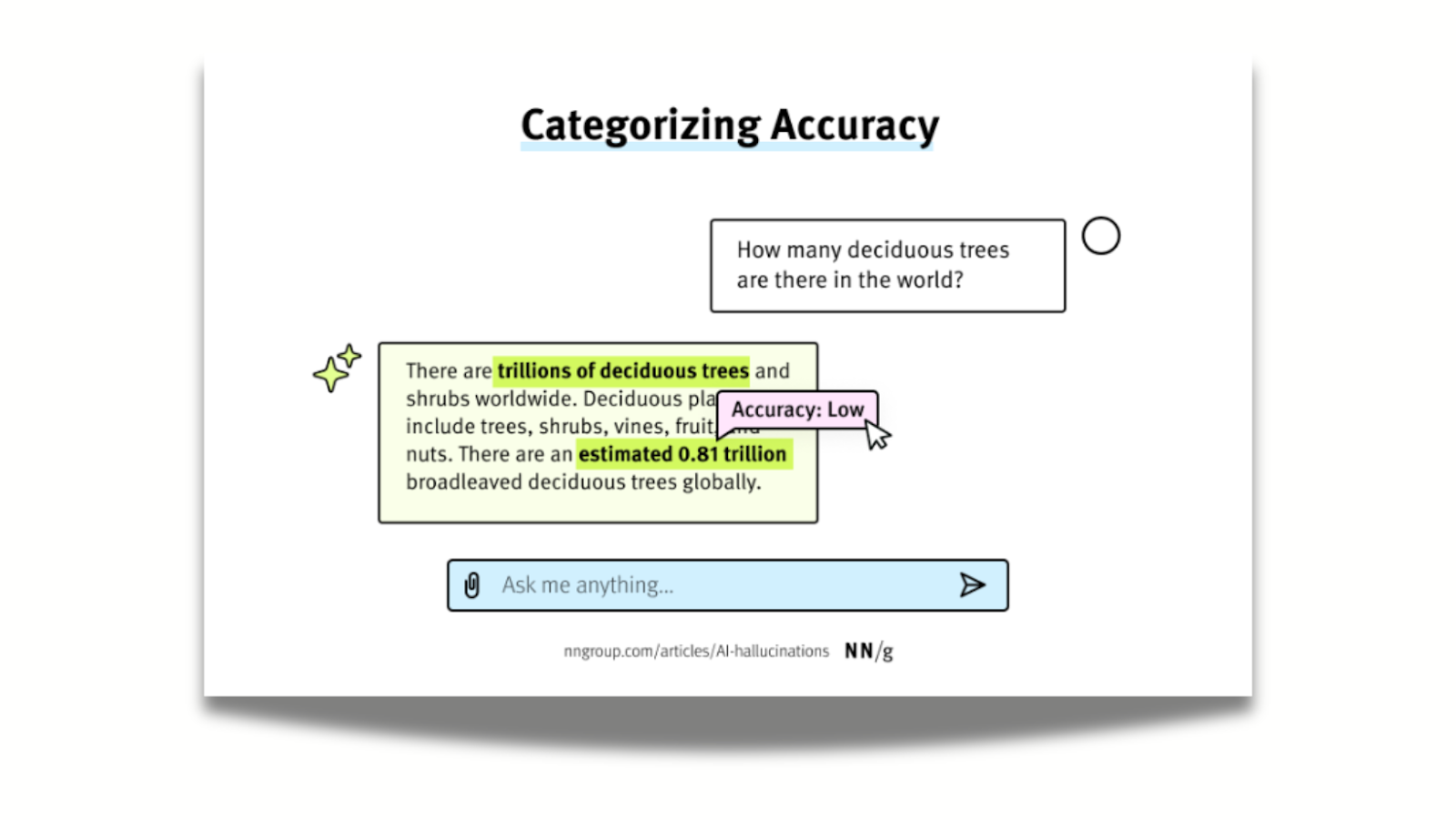

In SaaS products, AI-powered chatbots are reshaping how users interact with software. UX designer Gábor Szabó emphasizes that unlike traditional tools with predictable outputs, chatbots produce variable responses, so UI design must help users navigate this unpredictability.

Features like suggested alternatives, inline edits, and response versioning let users compare outputs and refine results, while framing answers as suggestions builds trust.

Furthermore, clearly labeling AI-generated content, providing concise reasoning snippets, and linking to source documents help users verify responses.

Inline review tools (quick accept, edit, or reject actions) integrate human oversight naturally into workflows, so errors are caught without slowing productivity. This design approach makes interactions more reliable, efficient, and genuinely helpful for business users.
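To make these UI patterns more concrete, here is a minimal sketch of the data a single AI answer might carry so the interface can label it, show its reasoning and sources, keep earlier versions, and record the reviewer's decision. The class and field names (`AiSuggestion`, `ReviewState`, `reasoning`, `sources`, `versions`) are our own illustrative assumptions, not part of any specific product or library.

```python
from dataclasses import dataclass, field
from enum import Enum


class ReviewState(Enum):
    """Hypothetical review states behind quick accept / edit / reject actions."""
    PENDING = "pending"
    ACCEPTED = "accepted"
    EDITED = "edited"
    REJECTED = "rejected"


@dataclass
class AiSuggestion:
    """One AI-generated answer, framed as a suggestion rather than a fact."""
    text: str
    reasoning: str  # concise reasoning snippet shown next to the answer
    sources: list[str] = field(default_factory=list)   # links to source documents
    versions: list[str] = field(default_factory=list)  # earlier texts, for comparison
    state: ReviewState = ReviewState.PENDING
    ai_generated: bool = True  # drives the "AI-generated" label in the UI

    def accept(self) -> None:
        self.state = ReviewState.ACCEPTED

    def reject(self) -> None:
        self.state = ReviewState.REJECTED

    def edit(self, new_text: str) -> None:
        # Keep the previous text so users can compare versions later.
        self.versions.append(self.text)
        self.text = new_text
        self.state = ReviewState.EDITED


# Usage: a reviewer edits a draft inline instead of accepting it as-is.
suggestion = AiSuggestion(
    text="Draft summary A",
    reasoning="Based on the linked quarterly report",
    sources=["report-q3"],
)
suggestion.edit("Draft summary B")
```

Keeping the review state and version history on the suggestion itself means the UI can show what changed and who signed off without a separate audit step.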

Edtech

Chatbots are increasingly shaping education. Tools like Ivy.ai demonstrate how chatbots can offer 24/7 assistance for university services, from admissions and course selection to financial aid, reducing administrative burden on staff while improving the overall student experience.

For learners, chatbots can act as tutors, answering questions, providing feedback, and guiding them through personalized learning paths, making education more accessible and tailored to individual needs.

Besides opportunities, there are notable risks in this industry as well. UX researcher Anna-Zsófia Csontos from UX studio highlights that unlike generic chatbots, educational chatbots must communicate clearly, provide accurate information, and anticipate a wide range of user abilities and contexts.

Incorporating UX principles ensures that chatbots support intrinsic motivation, foster engagement, and avoid over-reliance on AI for completing tasks.

Features like conversational clarity, contextual hints, adaptive responses, and inclusive language are critical to making the interaction intuitive and effective for diverse learners.

However, ethical challenges remain. Since chatbots often handle sensitive student information, transparency in data usage and adherence to privacy standards are paramount.

Designers must also consider equity, ensuring that all students (regardless of technological access, language proficiency, or learning ability) can benefit from AI support.

General considerations

How do I ensure a chatbot actually helps users?

To build actually helpful chatbots (or decide whether you need one), always consider the user and their context: what do they need? Would a chatbot be able to offer the easiest and simplest solutions?

According to Designnotes, the ideal chatbot query:

- is commonly asked

- leads to one simple answer or action

- or a small number of tailored answers or actions

Furthermore, the chatbot must be able to describe its own limitations (what it can and can’t help with) and remind users that they’re not talking with an all-knowing entity but, essentially, a piece of software. For this reason, domain-specific chatbots are better suited for most businesses.
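As a rough illustration of the points above, the sketch below shows a deliberately simple, domain-specific responder: it answers a small set of common questions with one clear answer each, and states its own limits for everything else. The intents, keywords, and answers belong to an imaginary online shop and are purely hypothetical; a real chatbot would use a more robust matching approach than keyword lookup.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    name: str
    keywords: tuple[str, ...]
    answer: str


# Hypothetical intents for an imaginary online shop.
INTENTS = (
    Intent("opening hours", ("opening", "hours"), "We are open 9:00-17:00 on weekdays."),
    Intent("returns", ("return", "refund"), "You can return items within 30 days."),
)

# The bot describes what it can and can't do, and that it is not a person.
LIMITATION_NOTICE = (
    "I'm an automated assistant and can only help with: "
    + ", ".join(intent.name for intent in INTENTS)
    + ". For anything else, please contact our support team."
)


def reply(message: str) -> str:
    """Return the single answer for a known question, else the limitation notice."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for intent in INTENTS:
        if words & set(intent.keywords):
            return intent.answer
    return LIMITATION_NOTICE


print(reply("What are your opening hours?"))
print(reply("Can you write my essay?"))
```

The fallback matters as much as the answers: an out-of-scope question gets an honest statement of limits and a route to human support rather than a guess.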

How do I integrate a chatbot without breaking my product experience?

Never brute-force assistance. Avoid intrusive UI, but make sure that visitors are aware that they don’t need to leave your site or contact support if they need help, because a built-in helper is available.

Remember that your goal is not to keep users chatting, but to help them complete their tasks smoothly. We suggest, though not without bias, working with UX/UI designers experienced with AI to help you create intuitive experiences.

What’s possible with AI vs what’s feasible?

By some estimates, 95% of AI projects fail. Even if the chatbot is only a small part of your product, make sure you can effectively prioritize the work of building and maintaining it.

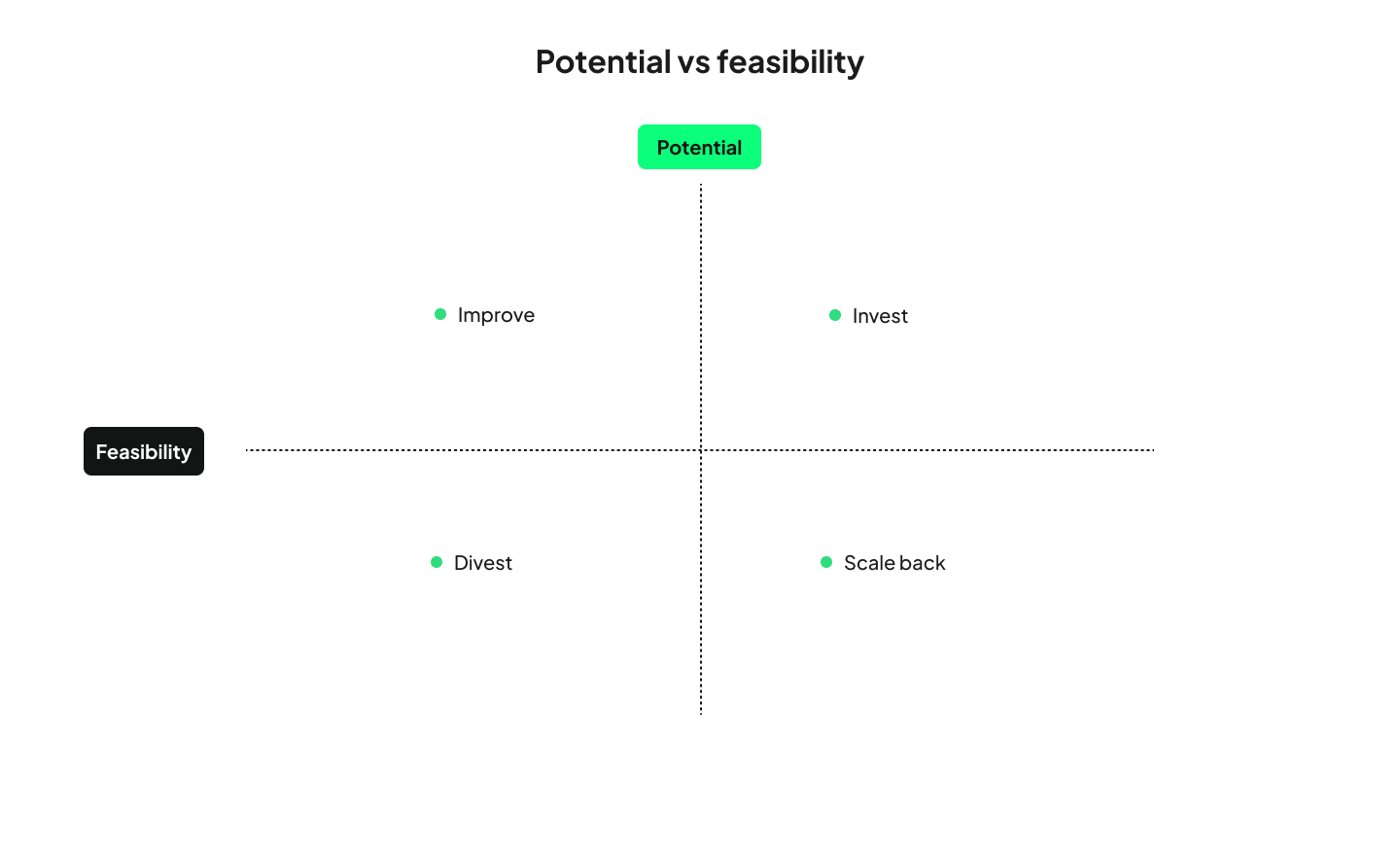

Skim Technologies suggests using a potential vs. feasibility matrix as a way to cut through internal noise. By involving all departments and evaluating AI ideas on business impact and execution readiness, organizations can make smarter decisions.

The matrix outlines four paths (Invest, Improve, Scale back, or Divest), each guiding how much time, money, and effort a project deserves. Beyond choosing new initiatives, the framework is also valuable for reassessing existing AI projects that aren’t delivering expected results and adjusting course before scaling to production.
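A minimal sketch of how such a matrix could be turned into a repeatable scoring exercise is shown below. Note that the mapping of quadrants to paths, the 0 to 1 scoring scale, and the 0.5 threshold are our own assumptions for illustration; check them against Skim Technologies' original matrix before relying on them.

```python
from enum import Enum


class Path(Enum):
    INVEST = "Invest"
    IMPROVE = "Improve"
    SCALE_BACK = "Scale back"
    DIVEST = "Divest"


def recommend_path(potential: float, feasibility: float, threshold: float = 0.5) -> Path:
    """Map two scores (0 to 1) onto one of the four paths.

    Assumed quadrant mapping (illustrative, not taken from the source):
      high potential, high feasibility -> Invest
      high potential, low feasibility  -> Improve
      low potential,  high feasibility -> Scale back
      low potential,  low feasibility  -> Divest
    """
    if not (0.0 <= potential <= 1.0 and 0.0 <= feasibility <= 1.0):
        raise ValueError("scores must be between 0 and 1")
    high_potential = potential >= threshold
    high_feasibility = feasibility >= threshold
    if high_potential and high_feasibility:
        return Path.INVEST
    if high_potential:
        return Path.IMPROVE
    if high_feasibility:
        return Path.SCALE_BACK
    return Path.DIVEST


# Hypothetical project ideas scored by a cross-department workshop.
ideas = {
    "support chatbot": (0.8, 0.7),
    "voice assistant": (0.9, 0.2),
    "auto-tagging": (0.3, 0.9),
}
for name, (potential, feasibility) in ideas.items():
    print(f"{name}: {recommend_path(potential, feasibility).value}")
```

The value of the exercise lies less in the exact numbers than in having every department score the same ideas against the same two axes.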

Ethical challenges & how to respond

While implementing AI solutions has strong business incentives, especially from shareholders, and can improve the user experience, the fledgling technology poses several threats.

1. Anthropomorphism

Many guides encourage designers and business owners to have their AI chatbots mimic human behavior or even use avatars. However, according to the Harvard Business Review, this is not what most users want.

Humanizing AI bots is also not without risk, as it exposes users to emerging threats like chatbot psychosis. These are far from edge cases: even workplace environments are affected. In extreme cases, AI chatbots may even promote violence towards the self or others by exploiting user trust.

Chatbot design, down to the UI, needs to put safeguards in place, and limit deep emotional attachment, over-reliance, and the opportunity for derailed conversations. Making the chatbot feel more like a machine is a step in this direction, so interactions feel less intimate and leave less room for harm.

2. Sustainability

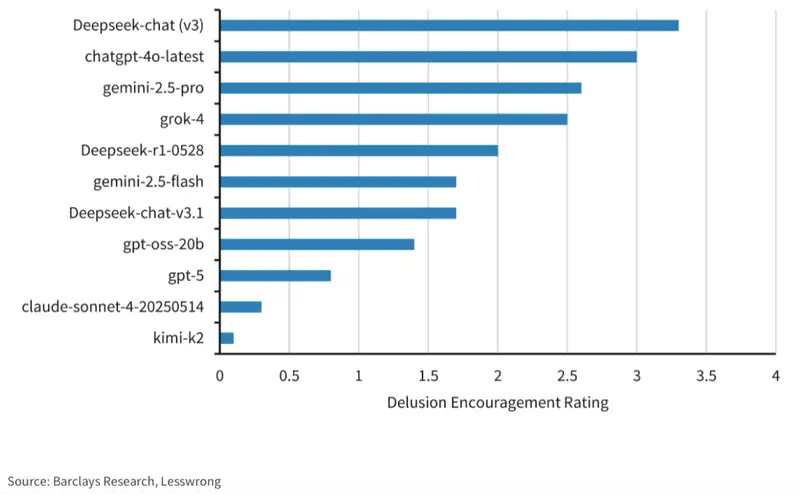

AI poses a serious environmental threat with the massive energy and water consumption of data centers. Research suggests that training just one natural language processing model can emit over 600,000 pounds of CO₂, which is nearly five times what a typical car produces across its entire lifespan. EY’s researchers further highlight that regulations are just catching up, and sometimes fail to account for the entire AI supply chain.

Environmentally conscious users may refuse to engage with AI-powered products or switch to competitors who use a more sustainable option.

You can reduce the environmental impact of AI by choosing renewable-powered providers, using energy-efficient or pretrained models, optimizing data and architecture, and relying on serverless or AI-optimized hardware.

3. Copyright violations

Many large language models were trained on content with little regard for intellectual property. While liability primarily falls on the model creator, if your business integrates a model trained on such a dataset, you may find yourself in hot water.

For example, ChatGPT’s Studio Ghibli filter was embraced by some businesses, but caused backlash from the general public for mimicking the beloved animation studio's hand-drawn style without its consent.

Ensure legal and IP safety by verifying training data, protecting proprietary information when using AI tools, and monitoring outputs to prevent IP exposure or infringement.

4. Potential for misuse

AI chatbots, including any chatbot your business uses, can be used by malicious actors for phishing and cyber attacks.

IBM advises making AI governance an enterprise priority, and provides practical tips on security risks, which include defining a clear strategy, identifying vulnerabilities through risk assessments and adversarial testing, protecting training data with secure-by-design practices, and improving organizational preparedness through cyber response training.

5. Trust issues

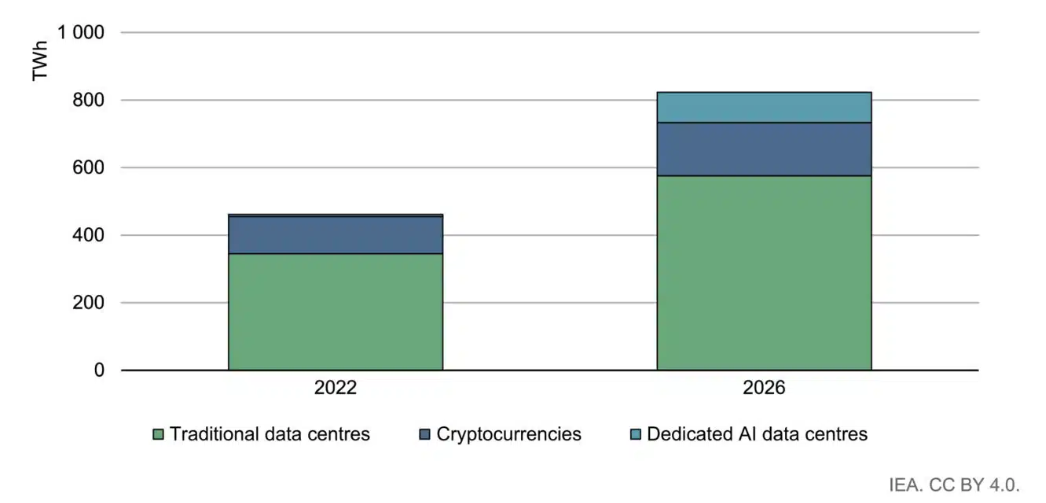

AI models’ tendency to confidently share misinformation, or to fall back on the inevitable biases of a system that favors statistical averages, has made many users hesitant to use them.

While 78% of companies use AI in some function, research shows that marketing products as “AI-powered” can actually reduce purchase intent.

Forbes attributes this to emotional reactions, with fear of errors and privacy issues chief among them.

We collected the best UX design practices to build trust in AI products while remaining transparent. The gist is, you can promote trust by:

- using explainable-AI techniques and tools;

- enforcing governance and review standards;

- educating users;

- verifying information;

- maintaining high-quality and well-tested models;

- ensuring ongoing human oversight;

- and staying current on emerging risks.

Main takeaways

As AI technology evolves, so do user expectations. Over-automating systems and removing human support is a misstep, and trust issues are abundant.

Improving functionality through development is the first step. Then UX/UI design experts can offer meaningful help to build chatbot features users actually want, based on solid research.

To get started, we recommend a UX audit. This low-commitment research method helps you make quick improvements and identify missed opportunities. Meet your users where they are and book a consultation with UX studio.