What’s next for UX research? 2026 trends and predictions

In just a few short years, UX research has gone from lab studies and sticky notes to prompt engineering, real-time data visualizations, and AI-generated insights. And it’s not slowing down anytime soon.

What we at UX studio observe, along with many other professionals involved in creating digital products, is that UX researchers are rising to meet these challenges head-on. They're discovering innovative, responsible ways to embrace new technologies, streamline their workflows, and deliver better results without compromising quality.

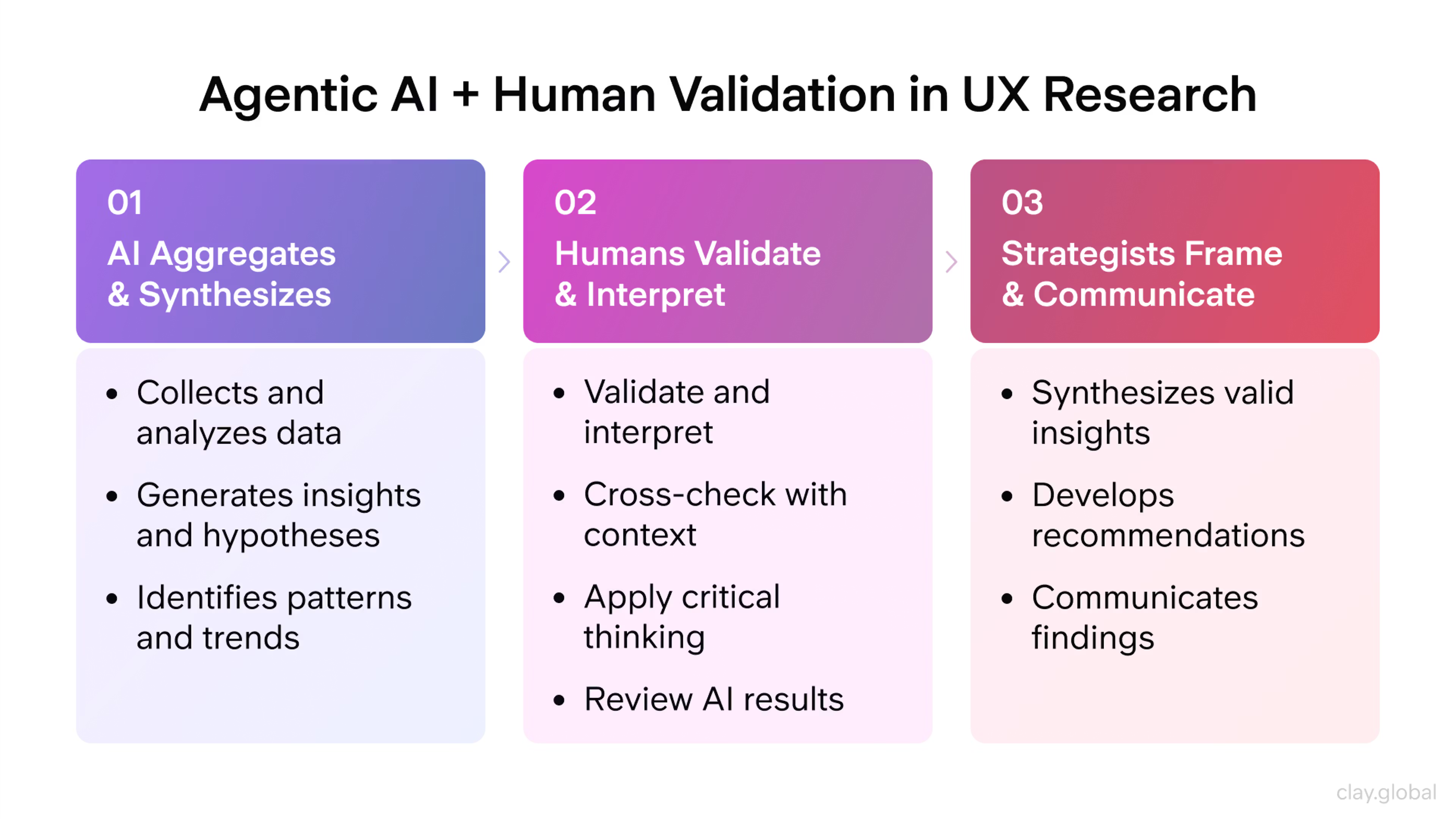

Researchers are particularly focused on adapting to the biggest shift in our field: the integration of AI in all its forms, from automating research processes and acting as research assistants to accelerating synthesis and analysis, enabling synthetic users, and much more. And they are doing it without cutting corners.

In this article, we explore the top trends shaping UX research in 2026, examine the challenges ahead, and discuss how researchers can remain effective, ethical, and adaptable in this rapidly evolving landscape.

Top 8 trends for the field of UX research

1. AI as a UX research assistant

We've heard from fellow UXers countless times that AI doesn't have to replace researchers, and it's not designed to. Instead, it can:

- Save significant time on routine tasks

- Complete 80% of the work, allowing you to add the crucial 20% with nuanced interpretation based on your experience and expertise

To get the best results from AI for analysis, however, researchers need to be methodical and experiment with prompting techniques when using general-purpose LLMs like ChatGPT, Gemini, or Claude.

This approach still works, and researchers are increasingly sharing their prompt engineering strategies and comparing which models work best for different types of synthesis. These general-purpose tools are often available for free; you just need to invest the effort to reach a level of performance you're satisfied with. The alternative route is to use AI-powered research tools, many of which are built on carefully crafted and evaluated prompts that others have already developed and refined before you.

However, AI won't help much with forging organizational relationships, disseminating insights, engaging stakeholders, or navigating internal politics—the fundamentally human aspects of research.

As Zsombor Várnagy-Tóth argues, it has simply not been feasible for human researchers to process vast amounts of user-generated content—posts and comments in forums, social media, thousands of reviews, or relevant scientific literature. This is why AI assistance for secondary research, including literature reviews, competitor analysis, and social listening, has become essential for our team and many others.

We at UX studio don't share the pessimistic view that suggests UX research will disappear as a profession or become obsolete in the near future. Instead, we believe that while AI will automate many routine tasks, the strategic thinking, stakeholder management, and deep user advocacy that experienced researchers provide will become even more valuable.

AI helps researchers quickly gather background and domain information for new projects, allowing them to start much further along in their understanding by the time primary research begins.

At UX studio, we recommend using general-purpose LLMs for secondary research and specialized AI research tools like Elicit for literature reviews. For a more comprehensive comparison, Nuance Behavior AI Lab's report benchmarking over 20 literature review tools provides valuable insights.

Looking ahead, we may see AI researchers conducting research sessions with AI agents or synthetic users—a fascinating development that's closer than many think.

Our take-away: AI growth will continue accelerating. Use it to your advantage to speed up work and process volumes of logs, data, and online information that would be impossible to handle with human processing power alone. Leverage AI for brainstorming research plans, research operations support, and even sophisticated statistical analysis.

2. Speeding up and automating low-expertise busy-work

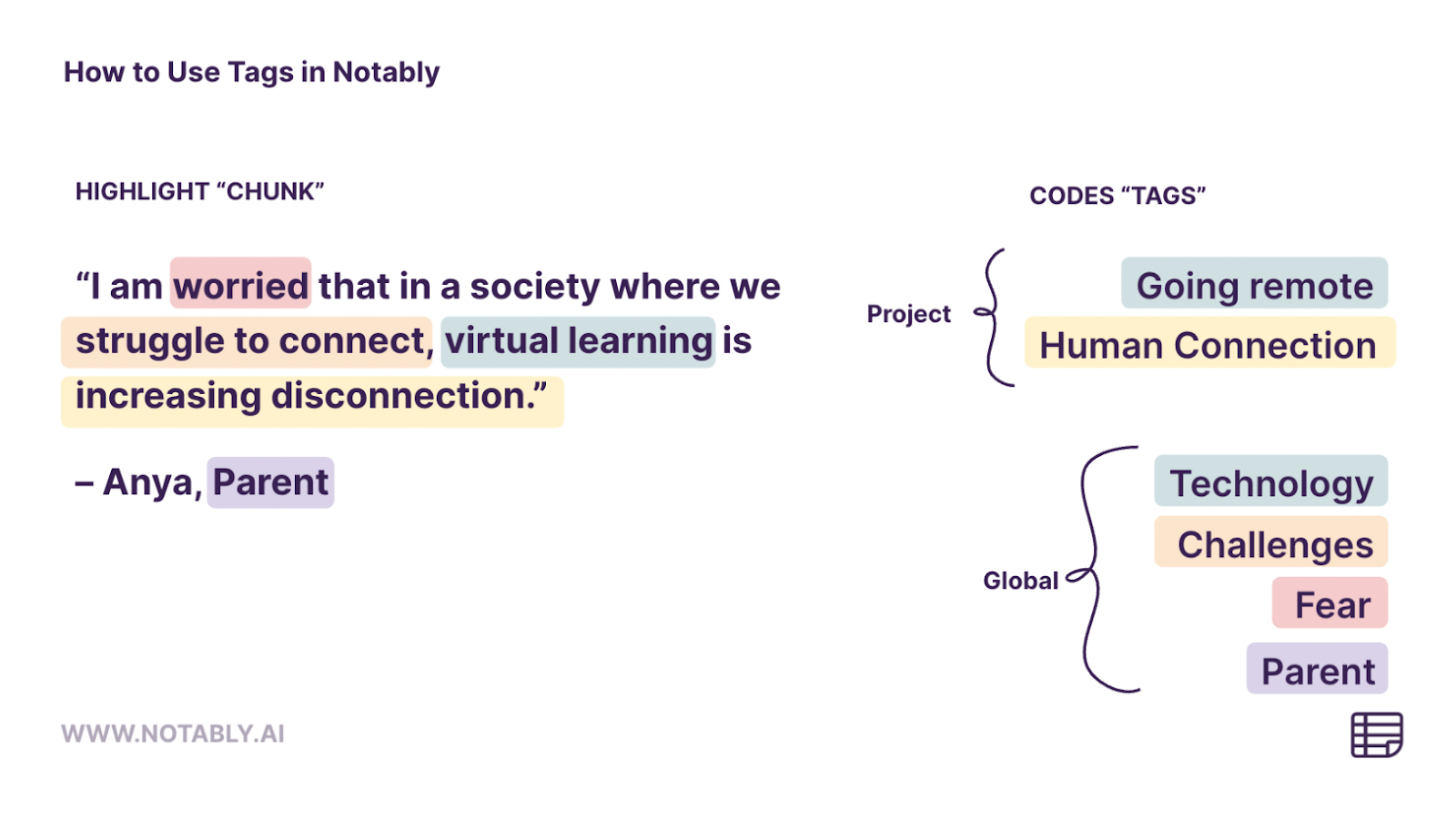

In 2026, many tasks that once took hours of manual work will become even easier to automate, although, according to our data, only a minority of researchers still do them manually. AI tools can often handle tagging qualitative data, summarizing transcripts, and generating insight drafts, which frees researchers to focus on higher-level thinking and strategy.

This shift is changing how research fits into product workflows. With faster turnaround, teams expect insights to be available earlier in the process. Researchers are adapting by working more closely with designers, PMs, and engineers, and learning how to deliver value at a new pace.

Our take-away: While data plays a crucial role in product decisions, we believe our experience, expertise, and user advocacy will remain essential for steering companies in the right direction and ensuring they do right by users and customers.

3. Researching AI: new products, new questions

At UX studio, one of our primary goals is making AI genuinely work for people in real-world scenarios, not just in polished demo videos or marketing materials. This means finding the right balance: pushing automation to its limits while ensuring the experience remains intuitive, helpful, and grounded in actual user needs.

This approach becomes increasingly important as AI features pop up everywhere: in some products intentionally and thoughtfully, in others arbitrarily. From smart suggestions to fully integrated co-pilots, researchers are being asked to evaluate not just usability but also trust, comprehension, and perceived value. What makes an AI tool feel useful and effortless rather than confusing or intrusive? At what point does its “presence” start to make users uncomfortable?

The recently founded Behavioral AI Institute is working to make AI systems more human-centered, context-aware, and ethically aligned. Their open letter highlights the risks of building AI that misunderstands how people actually think and behave, calling for a more interdisciplinary, user-focused approach to design and evaluation.

Our take-away: For UX researchers, this means developing skills to test prompt quality, mental models, and emotional reactions. Ensuring that AI functions correctly is not sufficient; people also need to feel good using it.

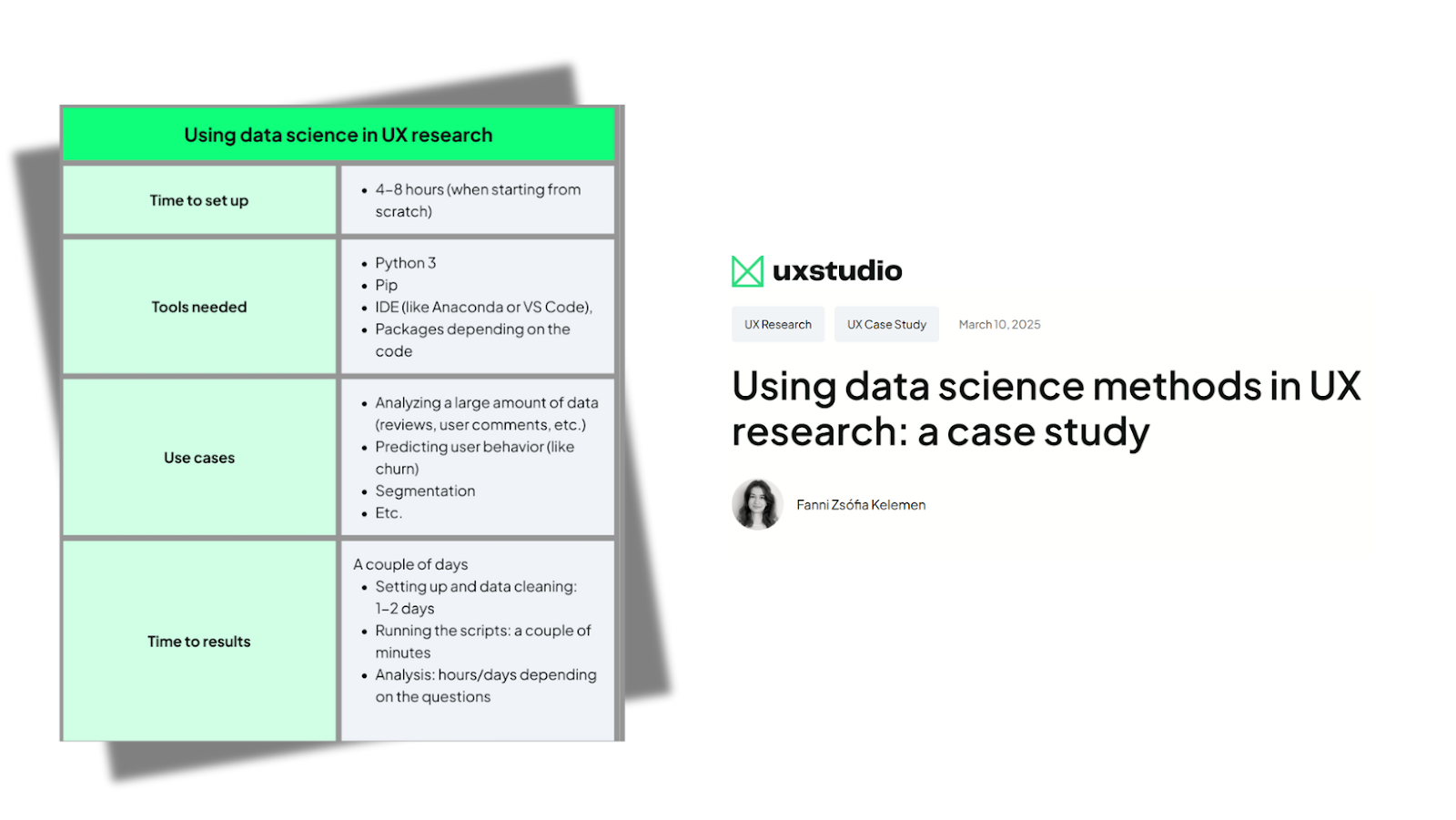

4. Using data science in UX research

Lower barriers to entry and AI assistance mean researchers no longer need advanced degrees or Python programming skills to perform sophisticated analyses like sentiment analysis on survey responses.

We documented how our team successfully used thematic analysis of free-text survey responses to deliver high-value insights without formal statistical training. Team members who pioneered these approaches shared their experiences with our broader research team, enabling everyone to apply these methods when they would benefit product decisions.

You can find our step-by-step guide in our previous publications, demonstrating how accessible these once-intimidating techniques have become.
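To make the idea more concrete, here is a minimal Python sketch of deductive thematic coding for free-text survey answers. It is not our published step-by-step guide: the codebook, themes, and keywords are hypothetical, and plain keyword matching is a deliberately crude stand-in for the interpretation a researcher (or an AI tool under a researcher's review) would apply.

```python
from collections import Counter

# Hypothetical codebook: each theme maps to keywords that signal it.
CODEBOOK = {
    "pricing": ["price", "expensive", "cost", "subscription"],
    "onboarding": ["setup", "sign up", "tutorial", "getting started"],
    "performance": ["slow", "lag", "crash", "loading"],
}

def code_response(text: str, codebook: dict[str, list[str]]) -> set[str]:
    """Return every theme whose keywords appear in a free-text response."""
    lowered = text.lower()
    return {
        theme
        for theme, keywords in codebook.items()
        if any(keyword in lowered for keyword in keywords)
    }

def theme_counts(responses: list[str], codebook: dict[str, list[str]]) -> Counter:
    """Count how many responses mention each theme (one response can hit several)."""
    counts = Counter()
    for response in responses:
        counts.update(code_response(response, codebook))
    return counts

responses = [
    "The subscription is too expensive for a small team.",
    "Setup was confusing and the app is slow.",
    "Love it, no complaints.",
]
print(theme_counts(responses, CODEBOOK))
```

Substring matching misses synonyms and can misfire (for example, "lag" also matches "flag"), so counts like these are a first pass for a researcher to review, not findings in themselves.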

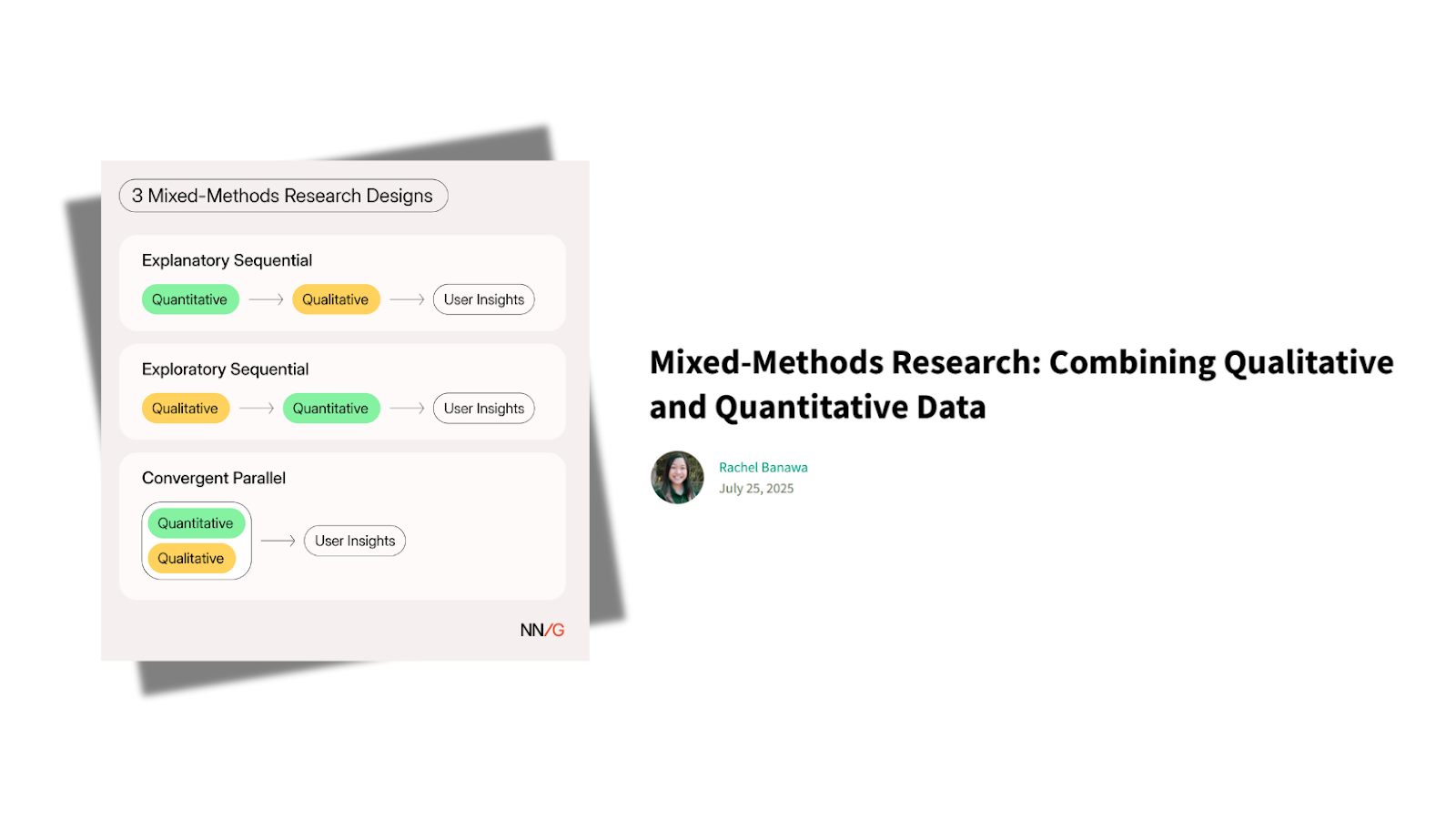

5. Mixed methods research: going beyond parallel studies

Mixed-methods research, the intentional integration of qualitative and quantitative methods within a single research project, is becoming standard practice rather than an exception. This approach goes far beyond simply running surveys alongside user interviews: it involves carefully planned integration of different research methods to answer the same overarching research question.

As Trevor Calabro explains, most teams claim they're doing mixed methods when they're really just running two separate studies. True mixed-methods research requires intentional structure where the methods inform each other, not just stacking findings together after data collection.

The power of mixed-methods lies in its ability to provide both scale and depth: measurable patterns alongside rich context. Quantitative methods reveal what's happening and to how many people, while qualitative methods explain why it's happening and what it means to users.

Three primary mixed-methods designs are emerging in UX practice:

- Exploratory Sequential Design starts with qualitative research to explore a problem space, followed by quantitative research to test and validate findings at scale. This approach works well when you don't yet know the key variables or behaviors you should be measuring.

- Explanatory Sequential Design begins with quantitative research to identify patterns, followed by qualitative research to explain those patterns. This is particularly valuable when metrics point to problems but don't reveal underlying causes.

- Convergent Parallel Design involves collecting qualitative and quantitative data simultaneously, then integrating the findings during analysis to create comprehensive understanding. This approach is effective when you need both perspectives to make informed decisions under tight timelines.

The key to successful mixed-methods research is integration: not just collecting different types of data, but systematically connecting them to generate insights that neither method could provide alone. This requires careful planning, aligned sample sizes, and predetermined integration points before data collection begins.
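One common way to build in an integration point is a joint display that lines up each qualitative theme with the quantitative scores of the people who raised it. The Python sketch below assumes a hypothetical explanatory sequential study in which a subset of survey respondents was interviewed afterward; the participant IDs, 1 to 7 satisfaction scores, and themes are all made up for illustration.

```python
from statistics import mean

# Hypothetical quantitative data: satisfaction score (1-7) per participant.
survey_scores = {"p01": 2, "p02": 6, "p03": 3, "p04": 7, "p05": 1}

# Hypothetical qualitative data: themes coded from follow-up interviews.
interview_themes = {
    "p01": ["unclear pricing"],
    "p02": ["fast setup"],
    "p03": ["unclear pricing", "missing export"],
    "p05": ["missing export"],
}

def joint_display(scores, themes):
    """For each theme, return (number of interviewees who raised it,
    mean survey score of those interviewees)."""
    rows = {}
    for participant, participant_themes in themes.items():
        if participant not in scores:
            continue  # only participants with both data types can be integrated
        for theme in participant_themes:
            rows.setdefault(theme, []).append(scores[participant])
    return {theme: (len(vals), round(mean(vals), 2)) for theme, vals in rows.items()}

for theme, (n, avg) in sorted(joint_display(survey_scores, interview_themes).items()):
    print(f"{theme:<16} n={n}  mean score={avg}")
```

Only participants who appear in both datasets contribute to the table, which is why aligned samples have to be planned before data collection rather than patched together afterward.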

Our take-away: Mixed-methods research represents the maturation of UX research as a discipline. As teams demand more comprehensive insights and faster turnaround, researchers who can systematically integrate different methods will deliver more actionable and convincing findings.

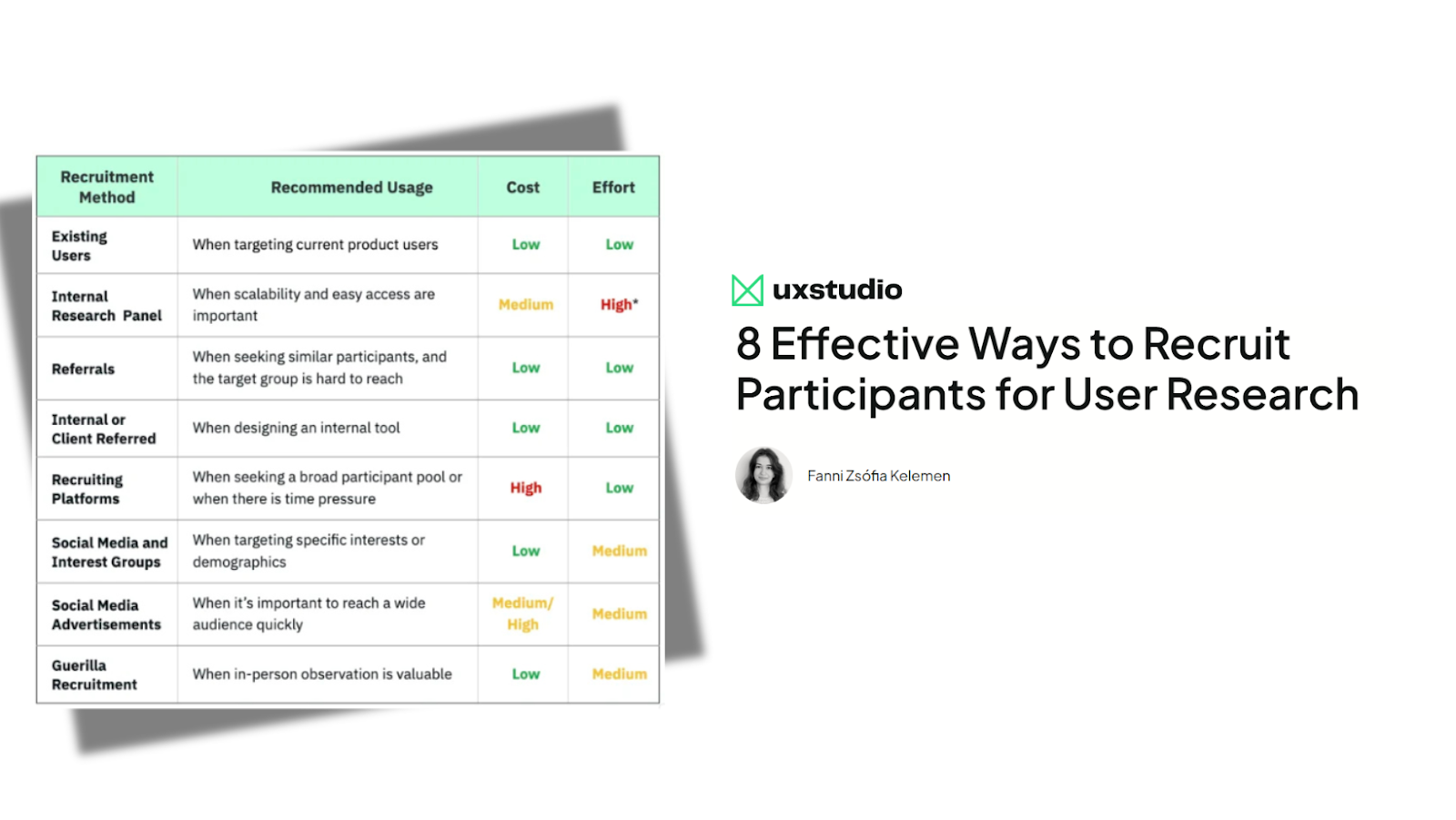

6. Ensuring authenticity: new strategies for participant quality

Recruiting the right participants is often the hardest, most costly part of UX research—but it's also the most vital. Generic panels and convenience samples usually fail to capture the nuance and credibility needed for authentic insight. Many panelists are professional testers who deliver polished but rehearsed responses that don’t reflect real decisions or experiences.

For niche audiences, like CISOs, clinicians, or enterprise decision-makers, the challenge is even greater. These people don’t live on survey panels. They’re hard to reach, guarded, and often unimpressed by generic outreach or incentives. They require thoughtful screening, credible approaches (such as LinkedIn outreach, referral-based recruitment, or specialist vendors), and sometimes in-person engagement to build trust.

Companies involved in market-, customer-, and UX research, like ImpactSense, emphasise authenticity at scale. Their globally verified panel of over 45 million participants across 97+ countries uses AI-enhanced and human quality-control processes to eliminate bots, fraudsters, and repeat respondents. According to their findings, up to 83% of researchers encounter inauthentic respondents during sessions, and bot-generated response rates can reach 60% in quantitative surveys. Often, artificial or fake data is worse than no data at all.
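The following is not ImpactSense's process, which is not described here. It is a minimal Python sketch of three generic checks often used when cleaning survey data: unusually fast completion ("speeders"), identical answers across a rating grid ("straight-lining"), and duplicated open-ended answers. The field names and the one-third-of-median speed threshold are assumptions chosen for illustration.

```python
from collections import Counter
from statistics import median

def flag_suspicious(responses, speed_ratio=0.33):
    """Return {respondent_id: [reasons]} for responses that trip simple quality checks.

    Each response is a dict with hypothetical keys: 'id', 'seconds'
    (completion time), 'grid' (Likert answers), and 'open_text'.
    """
    median_time = median(r["seconds"] for r in responses)
    # Normalize open-ended answers before counting exact duplicates.
    text_counts = Counter(r["open_text"].strip().lower() for r in responses)
    flags = {}
    for r in responses:
        reasons = []
        if r["seconds"] < speed_ratio * median_time:
            reasons.append("speeder")
        if len(r["grid"]) > 1 and len(set(r["grid"])) == 1:
            reasons.append("straight-lining")
        if text_counts[r["open_text"].strip().lower()] > 1:
            reasons.append("duplicate open text")
        if reasons:
            flags[r["id"]] = reasons
    return flags

sample = [
    {"id": "r1", "seconds": 420, "grid": [4, 2, 5, 3], "open_text": "Export to CSV is hard to find."},
    {"id": "r2", "seconds": 60, "grid": [3, 3, 3, 3], "open_text": "Great product, very useful."},
    {"id": "r3", "seconds": 390, "grid": [5, 4, 4, 2], "open_text": "Great product, very useful."},
    {"id": "r4", "seconds": 450, "grid": [2, 3, 1, 4], "open_text": "Notifications are too frequent."},
]
print(flag_suspicious(sample))
```

Treat flags as prompts for manual review rather than automatic exclusions: a genuine respondent can straight-line a short grid, and two real people can write the same brief answer.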

Based on our experience at UX studio, too, when participants are hard to reach—and therefore more selective—they often provide more honest, nuanced, and valuable feedback. Even if recruitment takes more effort, the insights are worth it. Our prediction: labor-intensive, in-person moderated interviews and contextual inquiry will see a thoughtful revival—especially for niche, sensitive, or specialized contexts.

We've also noticed people bringing AI into sessions. Some interviewees have used large language models to form answers. But when researchers are fully present, trained to focus, and aware, spotting AI-generated responses is usually straightforward. In those cases, it's acceptable to pause or cancel the session. You can also block copy-paste in survey forms.

Our take-away: Authenticity is the foundation of credible, actionable research. Take your time with careful screening: it saves the time, effort, incentive budget, and hours of analysis that would otherwise be wasted on data from bots or participants who misrepresent themselves.

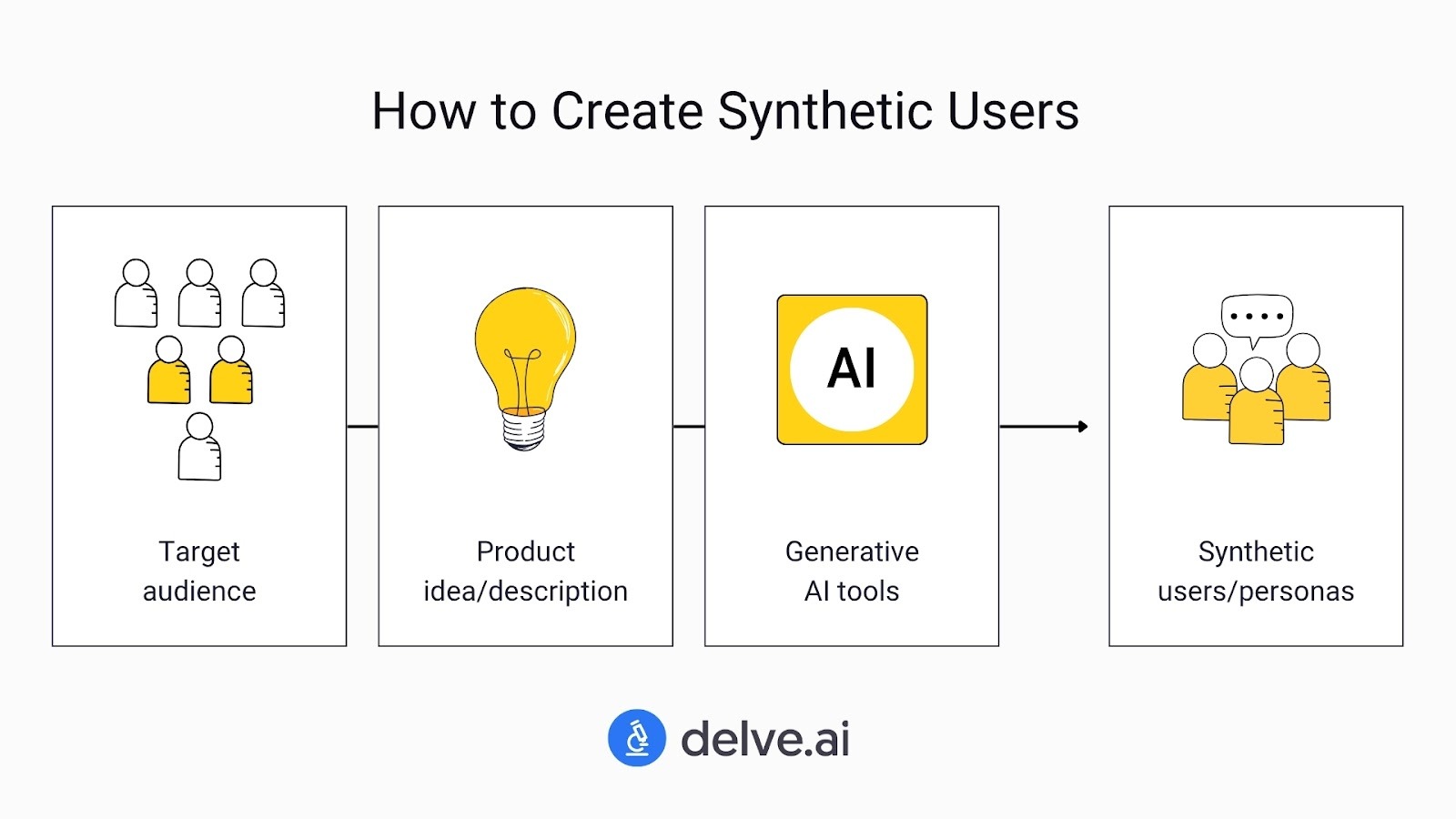

7. Synthetic users

Synthetic users, AI-generated stand-ins built from real data, are becoming a serious part of the UX research toolkit. They promise speed, cost savings, and scalable insights, making them especially appealing for B2B products, early-stage startups, and hard-to-reach user segments.

In a climate where UX research budgets are under pressure, decision-makers are understandably drawn to options that can deliver early signals quickly and affordably.

A growing number of studies point to this potential. A Stanford study showed that synthetic agents can mimic human responses with up to 85% accuracy. But researchers are also running their own experiments to understand what this actually means in practice.

For example, a Bayesian analysis by Aaron Gardony found that synthetic users tend to respond with much more internal consistency than real people. While humans often express contradictions, confusion, or hesitation—especially when dealing with emotionally charged or unfamiliar decisions—AI-generated users deliver predictable, clean responses.
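Gardony's work used a Bayesian analysis; the Python sketch below is a much simpler descriptive proxy for the same idea of internal consistency, not a reproduction of it. It averages, across respondents, the spread (population standard deviation) of each respondent's answers to several items meant to measure the same attitude. The ratings are made-up numbers chosen only to show the calculation, not results from any study.

```python
from statistics import mean, pstdev

def within_respondent_spread(answers_by_respondent):
    """Average, across respondents, of the standard deviation of each
    respondent's answers to items measuring the same construct.
    Lower values mean more internally consistent answers."""
    return mean(pstdev(answers) for answers in answers_by_respondent)

# Hypothetical 1-7 ratings on four items probing the same attitude.
human_answers = [[6, 3, 5, 2], [4, 4, 6, 1], [7, 5, 3, 6]]
synthetic_answers = [[5, 5, 5, 6], [6, 6, 6, 6], [5, 6, 5, 5]]

print("human spread:", round(within_respondent_spread(human_answers), 2))
print("synthetic spread:", round(within_respondent_spread(synthetic_answers), 2))
```

A real comparison would need matched items, adequate samples, and a proper model of uncertainty, which is what a Bayesian approach provides.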

That consistency might be helpful for early-stage research. It can speed up hypothesis testing and help teams quickly explore feature preferences, value props, or messaging. But it also raises an important question: are these personas simply presenting stereotypes of user pain points, or are they capturing the nuance of real lived experience? When synthetic users describe the experience of a parent juggling three jobs or a stressed-out professional in a high-stakes role, how do we know if it’s grounded insight or a shortcut?

Even highly accurate models won’t replicate moments of silence, resistance, or discomfort that often make qualitative research so valuable. Real users don’t always answer cleanly, and that friction is often where the real insight lives.

At UX studio, we’ve started experimenting with synthetic users across different tools, platforms, and levels of data input. We see their value as signal generators, especially when recruiting is slow or access to niche users is limited. But we also recognize their limitations. Some decisions require nuance, emotional depth, and a full understanding of context. You don’t get that by only listening to AI.

Thought leaders in UX research are making similar observations. In her article, Sara Stivers outlines both the opportunity and the risk of relying too heavily on synthetic input. While these tools are growing in popularity, they should not replace real users, especially when the stakes are high and human behavior is unpredictable.

Our take-away: Use synthetic users to support early signals and ideation, especially when resources are limited. But they are not a shortcut for understanding real people. Feed research data gathered directly from your target audience to the tool you use for simulating users, and keep complementing data from synthetic users with findings from your own recruits.

8. Personalization at scale

Without AI, few companies could deliver truly personalized experiences at scale. A compelling example from the digital health behavior change space is Lirio, which uses customer data to provide hyper-personalized health communication to each individual—an "N of 1" approach.

While this level of personalization isn't feasible or sensible for all digital companies, there's a clear trend away from one-size-fits-all design toward experiences that adapt to users' goals and preferences without requiring extensive manual customization.

Many applications now begin with onboarding assessments, using responses to personalize dashboards, goals, notifications, design elements, and the overall user experience. This approach recognizes that user needs vary significantly and that successful products must accommodate this diversity.
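At its simplest, onboarding-based personalization can be a set of rules that map assessment answers to a starting configuration. The Python sketch below is hypothetical: the questions, goals, widget names, and daily-goal values are invented for illustration, and it does not describe how Lirio or any specific product works.

```python
from dataclasses import dataclass, field

@dataclass
class OnboardingAnswers:
    goal: str                # hypothetical options: "sleep", "fitness", "stress"
    experience: str          # "beginner" or "experienced"
    prefers_reminders: bool

@dataclass
class DashboardConfig:
    widgets: list[str] = field(default_factory=list)
    daily_goal_minutes: int = 10
    notifications_enabled: bool = False

# Hypothetical mapping from a stated goal to the widgets shown first.
GOAL_WIDGETS = {
    "sleep": ["sleep_log", "wind_down_tips"],
    "fitness": ["activity_summary", "workout_plan"],
    "stress": ["breathing_exercise", "mood_check_in"],
}

def personalize(answers: OnboardingAnswers) -> DashboardConfig:
    """Turn onboarding answers into a starting dashboard configuration."""
    # Copy the list so edits to one user's dashboard don't change the shared defaults.
    widgets = list(GOAL_WIDGETS.get(answers.goal, ["getting_started"]))
    # Beginners start with a smaller daily goal than experienced users.
    minutes = 10 if answers.experience == "beginner" else 25
    return DashboardConfig(
        widgets=widgets,
        daily_goal_minutes=minutes,
        notifications_enabled=answers.prefers_reminders,
    )

config = personalize(OnboardingAnswers("stress", "beginner", prefers_reminders=True))
print(config)
```

The code is the easy part; research determines what belongs in those rules, such as which goals users actually have and which defaults genuinely help them.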

Our take-away: Dive deep into understanding users’ needs, goals, and motivations so that you can provide meaningful personalization. Pair that understanding with quantitative and behavioral data, translate it into actionable design decisions, and your users and business stakeholders will thank you.

+1: Not letting UX research and its methods become buzzwords

Amid all these trends, I see researchers on our team and in professional communities like LinkedIn and Slack being thoughtful and fighting to keep UX research relevant, rigorous, meaningful, and impactful.

With expectations shifting, product teams hastily releasing AI features, and mass layoffs still affecting the profession, this matters even more, as researchers need to keep proving themselves and the value of their work.